It always feels like somebody’s watching me...

Today’s video security technology can provide impressively detailed surveillance in many ways, but system integrators are still looking for better ways of interpreting the detailed night and day images these modern tools provide. Video analytics, image fusion, and high-definition capability are just some of the methods they are developing.

By John McHale

Video surveillance for homeland security and military installations consists of much more than a slovenly guard with several monitors and an endless supply of coffee.

The threats the nation faces today are more varied and complicated than ever before, and detailed video analysis is needed in addition to the naked eye watching the video monitor.

There are many different tools for surveillance users, such as infrared–short wave and long wave, image fusion, satellite links, and video streamed from unmanned aerial systems (UASs).

The way to bring all that together is through video analytics, says Charlie Morrison, director of full-motion video solutions at Lockheed Martin Information Systems and Global Services in Gaithersburg, Md. It is the idea of tracking objects and performing object recognition, he explains.

“There is so much information out there” that it needs to be organized and properly analyzed for the surveillance operator so he is not overloaded, Morrison continues. This technology is already out there in the commercial world, he says.

Tomorrow’s video surveillance screens will resemble the television feeds of ESPN and CNBC where sports scores and stock tickers are streaming across the bottom of the screen, Morrison says. There will be “multi-intelligence collected within the video so that it will be more than a picture.”

Morrison’s team at Lockheed Martin and engineers at Harris Corp. in Melbourne, Fla., are taking that technology and applying it to military surveillance and intelligence applications. “We’re looking to integrate commercial technology for DOD video,” Morrison says.

“Full-motion video has exceptional potential for intelligence collection and analysis,” says Jim Kohlhaas, Lockheed Martin’s vice president of spatial solutions. “Thousands of platforms are collecting important video intelligence every day. The challenge is to collect and catalog that huge volume of footage, and give analysts the tools they need to find, interpret, and share the critical intelligence that can be gleaned from that mountain of data.”

The main Lockheed Martin video analytics tool is called Audacity. It tags, sorts, and catalogs digital footage. According to a Lockheed Martin public release it also has intelligence tools, such as video mosaic creation, facial recognition, object tracking, and smart auto-alerts based around geospatial areas of interest.

Harris brings to the effort its Full-Motion Video Asset Management Engine, or FAME, which integrates video, chat, and audio directly into the video stream, according to the Lockheed release. The tool forms a digital architecture from an integrated processing and storage engine to provide the infrastructure for enhanced video streaming, according to the release.

“What we’ve done is take the best of FAME and Audacity and combine them together to the point that you cannot say which is doing what,” Morrison says.

Lockheed specializes in the “filtering of information from multi-intelligence sources” to analyze video and provide object recognition. The joint effort will focus on research and development of video capability and focus on analysis in real time and through archival footage, according to the Lockheed Martin release.

The team will also develop solutions for “cataloging, storing, and securely sharing video intelligence across organizational and geographic boundaries to include bandwidth constrained users,” according to the release.

More and more assets are being put out there, “infrared, day/night, vision, object recognition, etc., and we are providing the filter,” Morrison says. The software will organize it for the operator to make his decision and reaction processes quicker and more efficient, he adds.

“We already have done a deployment,” Morrison says. However, he declined to name where it was deployed or who is using the solution.

Improving decision time

The joint effort will bring surveillance operators ease of use through graphical user interfaces, Morrison says.

In a port security application, for example, operators will be able to access on their video screen not only the ship coming in, “but what it’s supposed to be carrying and what it actually is carrying,” says Tony Morelli, program manager at Lockheed Martin Information Systems and Global Services.

It will also provide a chat, which could include an audio chat between two commanders or operators chatting within the video stream, Morrison continues.

Archival analysis

Archiving video today involves chopping the video into 30-second to two-minute bits, Morrison says. Retrieval can take a long time, especially when you are looking for a two-second frame, he adds.

It is akin to searching for a sentence in a search engine that only gives you the 10,000-word document the sentence is located in, Morrison notes.

Meta tagging the data is much faster, Morrison says. For example, imagine a red Ford Mustang that is sitting outside a building, he says. An operator can search to see where that red Ford Mustang was three days ago, and then play out the video before and after the car appeared–much the same way your digital video recorder or TiVo allows you to go back and see what you missed when stepping away from the TV.

This is an example of geospatial awareness, Morrison continues. The system conducts temporal (time), keyword, and geospatial searches, he adds.
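To make the idea of combined temporal, keyword, and geospatial searching concrete, here is a minimal Python sketch. It is not the Lockheed Martin/Harris software, whose internals the article does not describe; the `Tag` record, the `search` and `playback_window` functions, and every field name are hypothetical, chosen only to illustrate how tagged video can be searched by what, when, and where at once.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import asin, cos, radians, sin, sqrt


@dataclass
class Tag:
    """One hypothetical metadata tag attached to a moment in a video stream."""
    stream_id: str
    time: datetime
    lat: float
    lon: float
    keywords: frozenset


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points (haversine formula)."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def search(tags, keywords, start, end, center, radius_km):
    """Return tags that carry every keyword, fall in the time window,
    and lie within radius_km of center, given as (lat, lon)."""
    wanted = set(keywords)
    return [
        t for t in tags
        if wanted <= t.keywords
        and start <= t.time <= end
        and distance_km(center[0], center[1], t.lat, t.lon) <= radius_km
    ]


def playback_window(tag, pad_seconds=30):
    """Time span to replay around a hit, before and after the tagged moment."""
    pad = timedelta(seconds=pad_seconds)
    return tag.time - pad, tag.time + pad
```

In this sketch, a search for the keywords "red" and "mustang" over the last 10 days near a given building returns only the matching tagged moments, and `playback_window` gives the span of video to replay around each one, rather than handing back a whole two-minute clip.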

Morrison notes that the solution does not search for spoken words, but rather for the transcribed texts of the audio data. Morrison elaborates with another red Ford Mustang example. An operator can “search for any and all references to the red Ford Mustang he sees on his screen for the last 10 days and up it will come,” he explains.

Interactive analysis

Down the road, the goal is to get more interactive with the video with touch-screen capability, Morrison says. Operators will be able to touch a piece of data in the stream to pull more detailed information–whether it is a person of interest or a car or even a chat between two commanders in the field.

One of the advantages “our solution will have is its ability to work with a variety of surveillance tools,” Morrison says. The Army, Navy, Air Force, and Marines all use different tools to exploit information and the joint Harris/Lockheed Martin solution can work with all of them, he adds. “The key to this is standardization,” Morrison says. If the users all use the same standards of metadata it is easy to integrate no matter the tools involved, he explains. The video standards are set by the Motion Imagery Standards Board, or MISB, Morrison notes.

The touch-screen capability is still years away, but in the background the system will flash information it thinks the operator may need based on his tendencies, such as past searches as well as what he is seeing on the screen, Morrison says. This is similar to how Web sites like Amazon.com analyze the purchases of customers to push other items to them to buy, he continues.

Panoramic image fusion

Extracting and analyzing video data will be more efficient if the video is actually capturing everything in view. Engineers and scientists at GE Fanuc Intelligent Platforms (Bracknell) Ltd. (formerly Octec Ltd.) in Bracknell, England, are looking at ways to create a panoramic view that processes video the way the human brain processes images seen by the human eye.

It is a matter of presenting it to the human eye in a physical manner because the human eye reacts to physical movement, says Larry Schaffer, vice president of business development for GE Fanuc Intelligent Platforms (Bracknell). Currently most systems have so much symbology in displays that the operator is overwhelmed and cannot respond effectively.

GE Fanuc engineers figure that if they can present the image in a more physical manner with less symbology, it will be easier for the operator to determine what he is seeing and make the proper decisions.

Schaffer and his team are looking to re-create the way this information is presented by creating an array of cameras with several sensors active all the time, he says. “It is called distributed aperture sensing,” Schaffer adds.

In a way, it is not unlike a panoramic zoom camera, Schaffer says. The operator gets views from behind his head if he is out in the field in a tank or other vehicle, he adds.

The imagery is a form of image fusion, Schaffer says. Day and night imagery is overlaid in a 160-degree or 360-degree visual, he adds. Resulting imagery is much more contrast rich, providing the eye with the necessary physical stimuli, he says. This then “gets us back into data extraction since all sensors active all the time” essentially give the operator eyes in the back of his head, he continues. If there is an object of interest at his rear, he should be able to hand touch a display screen whether in a building or driving and get that data in real time, Schaffer explains.

“This creates a possible 3D environment,” Schaffer says. Peripheral vision becomes just as clear as what is seen straight ahead.

The video processing is enabled by GE Fanuc’s IMP20 video processing mezzanine card, which is an add-on module for the company’s ADEPT 104 and AIM12 automatic video trackers to provide the cards with a supplementary image fusion capability, according to a GE Fanuc data sheet.

The device’s image fusion algorithm produces faster execution times and reduced memory overheads, according to the data sheet. The IMP20 also has a “built-in warp engine that provides rotation, scaling, and translation for each video source to compensate for image distortion and misalignment between the imagers, reducing the need for accurate matching of imagers” while reducing total system cost.
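The rotation, scaling, and translation the data sheet mentions can be pictured as a simple coordinate mapping applied to each video source before fusion. The Python sketch below is only an illustration under that assumption, not the IMP20’s implementation; the function names and parameters are hypothetical.

```python
from math import cos, radians, sin


def warp_point(x, y, angle_deg=0.0, scale=1.0, dx=0.0, dy=0.0):
    """Rotate a pixel coordinate about the origin, scale it uniformly,
    then translate it by (dx, dy)."""
    a = radians(angle_deg)
    xr = x * cos(a) - y * sin(a)
    yr = x * sin(a) + y * cos(a)
    return scale * xr + dx, scale * yr + dy


def warp_points(points, **params):
    """Apply the same warp to a list of (x, y) coordinates."""
    return [warp_point(x, y, **params) for x, y in points]
```

If one imager is mounted slightly rotated and offset from another, choosing `angle_deg`, `scale`, `dx`, and `dy` to cancel that misalignment lets the two images line up pixel for pixel, which is why a built-in warp stage reduces the need for precisely matched imagers.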

It is true situational awareness enabled by overlaying thermal imagery with day pictures in image fusion then adding object recognition capability, Schaffer says.

SWIR

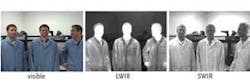

While shortwave infrared (SWIR) sensors have been around for a long time, this technology is still in demand for mission-critical surveillance applications.

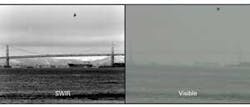

“The SWIR band has been shown to be valuable for imaging through atmospheric obscurants like fog, haze, dust, and smoke,” says Robert Struthers, director of sales and marketing at Goodrich ISR Systems (formerly Sensors Unlimited) in Princeton, N.J. “Fusing SWIR with long-wave or mid-wave thermal cameras is useful for driver vision enhancement, airborne wide-area persistent surveillance, and enhanced soldier portable night-vision imaging systems. Fundamentally, the thermal bands detect and the SWIR band identifies.

“High performance SWIR cameras will image urban night scenes without blooming or washout from intense light sources,” Struthers continues. “Because of the longer wavelength sensitivity than visible cameras or night-vision goggles (NVGs), InGaAs (Indium Gallium Arsenide) cameras combined with wavelength matching and covert illuminators can enable soldiers to avoid threats from NVG-equipped subjects. SWIR is also excellent for imaging during thermal crossover (near dawn/dusk),” he adds.

“The SWIR spectral band produces images from reflected light, not temperature as thermal imagers,” Struthers explains. “These images are more recognizable than thermal spectral bands because they appear much like monochrome visible images providing target/biometric identification capability.”

Goodrich’s SWIR indium gallium arsenide (InGaAs) cameras “provide the highest resolution available in the SWIR band: 640-by-512 pixel arrays,” Struthers says. “Most camera models are available in enclosed housings or as miniature open-frame modules for embedding into surveillance gimbals and small optical systems.”

Goodrich’s latest InGaAs camera is the SU640KTSX-1.7RT, which has high sensitivity and a wide dynamic range. According to a Goodrich data sheet, it provides real-time, night-glow to daylight imaging in the SWIR wavelength spectrum for passive surveillance and use with lasers. The camera’s onboard Automatic Gain Control (AGC) enables image enhancement and built-in non-uniformity corrections (NUCs), according to the data sheet. The Goodrich device also has an extended lower wavelength cutoff, enabling the camera to capture photons previously only possible with silicon-based imagers.

Funding from the Defense Advanced Research Projects Agency in Arlington, Va., is fueling Goodrich’s SWIR camera developments for reducing size, weight, and power for small unmanned platforms and portable, night-vision components, Struthers says. “The proliferation of SWIR cameras has spurred development of covert illuminators and specialty AR-coated lenses to optimize night-vision capability for the warfighter.”

Digital and high definition in high demand in surveillance applications

Engineers at FLIR Systems in Portland, Ore., find that end-users of their technology want more and more digital capability and high-definition (HD) imagery.

“There are some systems out there that may have one digital HD sensor and the rest are standard-definition analog sensors,” says David Strong, vice president of marketing for FLIR government systems. Having an all-HD solution is “critical to provide long-range, high-resolution surveillance in all conditions, both day and night, to allow the users to perform their jobs more quickly and efficiently, and to cover more ground than previously possible.”

FLIR’s HD digital solution is the “Star SAFIRE HD system, which provides full high-definition imaging capability in a stabilized turret for airborne and maritime applications,” Strong says. “The thing about this system is that all of its sensors–infrared, zoom color TV, low-light TV, and long-range color TV–are digital high-definition sensors, and we maintain full digital image fidelity throughout our system.

“It is the only system that is all-digital, all-HD, all of the time,” Strong continues. He notes that while everyone wants the digital capability, users will have different environmental and operational requirements depending on where they operate.

Airborne applications need stabilized long-range sensors, Strong says. “The land folks need light, hand-held sensors that last a long time on their batteries. They also need extremely reliable systems that can operate 24/7 in hot dusty climates. The maritime folks need systems that can withstand constant exposure to salt spray and still work reliably on long maritime missions with little available support.”

FLIR supplies the Star SAFIRE III to the U.S. Coast Guard for a range of platforms, including the HC-144A medium-range surveillance aircraft, the HC-130J long-range surveillance maritime patrol aircraft, and the National Security Cutter, Strong says.

“We also have more than 80 Star SAFIRE class systems operating with U.S. Customs and Border Protection (CBP) on P-3 Orions, Black Hawks, and AS350 helicopters, including the Star SAFIRE HD,” Strong continues. Last year FLIR won another CBP contract to provide the Recon III long-range, hand-held imagers for homeland security missions, he adds.

Video processor enables image fusion

Engineers at Sarnoff Corp. in Princeton, N.J., have combined in one real-time video processor the functionality and performance for image fusion that previously required several devices.

Sarnoff officials announced the Acadia II video processor at the SPIE Defense, Security, and Sensing show in Orlando, Fla., this spring.

The Acadia II provides enhanced vision for warfighters, says Orion White, field application engineer at Sarnoff. The device provides “three-band image fusion in high-definition resolution where the Acadia I only had two-band image fusion.”

An example of image fusion is combining a long-wave infrared image with a short-wave infrared image.
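At its simplest, fusing two bands can be thought of as blending two aligned images pixel by pixel. The Python sketch below shows a plain weighted average of two same-sized grayscale frames. It is an assumption-laden illustration only: the article does not describe Sarnoff’s fusion algorithm, and the `fuse` function and its parameters are hypothetical.

```python
def fuse(band_a, band_b, weight_a=0.5):
    """Pixel-wise weighted average of two same-sized grayscale images,
    each given as a list of rows of integer pixel values."""
    if not 0.0 <= weight_a <= 1.0:
        raise ValueError("weight_a must be between 0 and 1")
    if len(band_a) != len(band_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(band_a, band_b)
    ):
        raise ValueError("images must have the same dimensions")
    weight_b = 1.0 - weight_a
    return [
        [round(weight_a * pa + weight_b * pb) for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(band_a, band_b)
    ]
```

A fixed blend like this is the crudest form of fusion; it mainly shows why the input frames have to be aligned first, since each output pixel mixes the two input pixels at the same position.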

Processing functions such as contrast normalization, noise reduction, warping, have detection, target detection and tracking, video mosaics, etc., that previously would take several processors and components “now can be performed on one chip,” White says.

“With more processing power, smaller size, and less energy consumption,” designers can put their systems on Acadia II instead of the other way around, says Mark Clifton, vice president, products and services at Sarnoff.

The system uses four ARM quad-core processors, White says. The floating-point processors run at 300 megahertz with a low-power range between 1 and 4 watts–with four independent power-down regions, according to the Sarnoff product data sheet.

White notes that the Acadia line of processors was developed under a Defense Advanced Research Projects Agency (DARPA) program called Multispectral Adaptive Networked Tactical Imaging System (MANTIS) and has become an off-the-shelf product for Sarnoff.

According to DARPA’s Web site, the MANTIS program was created to “develop, integrate, and demonstrate a soldier-worn visualization system, consisting of a head-mounted multispectral sensor suite with a high-resolution display and a high-performance vision processor” or application-specific integrated circuit (ASIC), connected to a power supply and radio–worn and carried by the soldier.

The helmet-mounted processor should provide “digitally fused, multispectral video imagery in real time from the visible/near infrared (VNIR), the short-wave infrared (SWIR), and the long wave infrared (LWIR) helmet-mounted sensors”–all fused to be seen in real time in a variety of battlefield conditions, according to the DARPA Web site.

Other Sarnoff processor features include real-time video enhancement, stabilization, mosaicking, multi-sensor video fusion, stereo range estimation, and image detection, according to the Acadia II data sheet. Other Acadia II specifications include 1280 by 1024 RGB video output with on-screen display processor, three digital video input ports, and support for as much as 108 megahertz parallel video.

The Acadia II mezzanine board is a 3 by 2.5-inch printed circuit board with low-profile Samtec connectors, according to the Sarnoff data sheet. The device memory includes Flash and 1 gigabyte of DDR2 memory.

According to a Sarnoff public release, Acadia II is targeted at portable and wearable vision systems; security and surveillance platforms; manned and unmanned aerial and ground vehicles; small arms, border, and perimeter protection; and vision-aided GPS-denied navigation.

For additional information, visit Sarnoff Corp. online at http://www.sarnoff.com/products/acadia-video-processors.