The UAV video problem: using streaming video with unmanned aerial vehicles

By Tim Klassen

Unmanned aerial vehicles (UAVs) present different video handling requirements from traditional manned vehicles. These demands can be summarized as:

1) the number of video streams handled;

2) the criticality of the video data link to the success of the mission;

3) even greater space/weight/power limitations;

4) potentially more demands on distribution, such as several remote data consumers; and

5) varying and, at times, restrictive limitations on data-link bandwidth.

When it comes to adding video data streams in manned vehicles, normally only intra-vehicle distribution is required. Most video display needs can usually be met by adding wired connections for video sources as necessary and routing physical connections to display destinations. Video transmission to a ground station is either not needed at all or is implemented as an add-on that is not critical to the safety or functionality of the vehicle. When additional video streams are installed in an unmanned vehicle, however, the data has to be encapsulated and transmitted to a ground station over a relatively low-bandwidth data link. This places increasing pressure on the video capture, compression, and transmission solution implemented on the aircraft.

Additionally, in unmanned air vehicles, the criticality level of the video data is entirely different. For unmanned systems, video data constitutes the only “eyes-in-the-sky” and thus the only link that a ground crew may have with what is actually going on in the vicinity of an unmanned air vehicle.

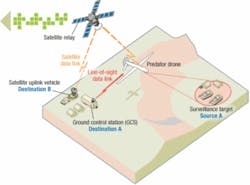

Because it is not unusual for the unmanned air vehicle to be “flown” by a ground crew hundreds or even thousands of kilometers away, the video data link becomes absolutely critical to the safety and success of the mission. The figure shows a typical scenario with a target, UAV, and ground station configuration. For this arrangement to function safely and effectively, the quality, reliability, and latency of the video stream must be optimized. Also, in recent years the number and resolution of video sensors mounted on unmanned vehicles have continued to increase, while the demands on quality, reliability, and latency remain unchanged.

With the addition of weaponized unmanned platforms, the need for more information, and in particular more video information, has continued to multiply. This has resulted in a massive increase in the amount of video data that must be handled, with increasing complexity, by the processing hardware installed in the unmanned vehicle payload. Compounding the problem are the ever-present pressures on space, weight, and power (SWaP), together with the desire for longer-endurance units with greater functionality and lower fuel consumption.
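To put the growth in video data into numbers, the sketch below computes the uncompressed data rate of one sensor stream as width x height x frame rate x bits per pixel. The listed formats and the 16 bits per pixel (8-bit YUV 4:2:2 sampling) are illustrative assumptions, not figures from any particular UAV payload.

```python
def raw_bitrate_mbps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Uncompressed video data rate in megabits per second (1 Mbit = 10**6 bits)."""
    return width * height * fps * bits_per_pixel / 1e6


# Illustrative formats (assumed); 16 bits/pixel assumes 8-bit YUV 4:2:2 sampling.
FORMATS = {
    "640x480 at 30 fps (RS-170 class)": (640, 480, 30, 16),
    "1280x720 at 30 fps": (1280, 720, 30, 16),
    "1920x1080 at 30 fps": (1920, 1080, 30, 16),
}

if __name__ == "__main__":
    for name, (w, h, fps, bpp) in FORMATS.items():
        print(f"{name}: {raw_bitrate_mbps(w, h, fps, bpp):.1f} Mbit/s")
```

Under these assumptions a single 640x480 stream needs about 147.5 Mbit/s before compression, and a 1920x1080 stream about 995.3 Mbit/s, so each step up in sensor resolution multiplies the load on the payload and the data link.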

The criticality and computational complexity presented by video data in an unmanned vehicle environment are therefore significant. Adding to these challenges is the increasing need for flexible and accurate access to video data as situational awareness techniques migrate toward net-centric warfare. In this scenario, UAV video data is no longer transferred only to the one ground crew location responsible for controlling the mission.

Now, instead of one data source and one destination (as shown in the figure at right), there may be numerous “sources” as the number and types of image sensors increase, and many “destinations” as more end users request access to the overhead video sensors. Examples of destinations may include central mission control, mobile mission control, mobile ground forces (with handheld devices), or other manned air vehicles. For data that is acquired using UAV missions, the intended uses can span the range of command, control, communications, computers, intelligence, surveillance, target acquisition, and reconnaissance (C4ISTAR) applications.

The destinations will need to view the data at different resolutions and with varying degrees of criticality. For instance, the pilot and crew of the UAV mission will have the most critical level of access, while an alternate ground destination or other air unit may need the data only for information gathering, not for mission safety. Such a broad range of technical criteria and data uses poses significant challenges for video acquisition, compression, and transmission technologies.
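One way to reason about destinations with different criticality is a priority-ordered bandwidth budget. The sketch below is a hypothetical policy, not a feature of any product or protocol described here: it first guarantees each consumer's minimum useful rate, most critical first, and then spends any leftover capacity raising consumers toward their desired rate. The `Consumer` fields, the priority convention, and the example rates are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Consumer:
    name: str
    priority: int      # lower number = more critical (hypothetical convention)
    min_kbps: int      # below this rate the feed is of no use to this consumer
    desired_kbps: int  # rate at which this consumer is fully served


def allocate(consumers: list[Consumer], link_kbps: int) -> dict[str, int]:
    """Greedy two-pass allocation of link capacity by criticality."""
    ordered = sorted(consumers, key=lambda c: c.priority)
    grants = {c.name: 0 for c in ordered}
    served = set()
    remaining = link_kbps
    # Pass 1: guarantee each consumer's minimum, most critical first.
    # A consumer whose minimum no longer fits is skipped entirely.
    for c in ordered:
        if c.min_kbps <= remaining:
            grants[c.name] = c.min_kbps
            served.add(c.name)
            remaining -= c.min_kbps
    # Pass 2: spend leftover capacity raising served consumers toward
    # their desired rate, again most critical first.
    for c in ordered:
        if c.name in served and remaining > 0:
            extra = min(max(0, c.desired_kbps - grants[c.name]), remaining)
            grants[c.name] += extra
            remaining -= extra
    return grants


if __name__ == "__main__":
    consumers = [
        Consumer("pilot_crew", 0, 1500, 4000),
        Consumer("mobile_control", 1, 500, 2000),
        Consumer("handheld", 2, 250, 500),
    ]
    print(allocate(consumers, 3000))
```

With a hypothetical 1800 kbit/s link, the pilot crew keeps its feed, the mobile control station is dropped because its minimum no longer fits, and the handheld consumer still receives its small low-rate feed.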

The next level of complication in the UAV video-handling problem is the data link available to a UAV, which usually includes such options as line-of-sight radio link or satellite link. During the course of one mission, the bandwidth available for communication between the UAV and ground crew can vary significantly and unpredictably for many reasons.

As a UAV moves from line-of-sight data links to satellite data links, a significant reduction in bandwidth occurs. When operating over a satellite data link, environmental or physical obstacles can also influence the bandwidth available for data communication. A protocol that allows automated quality adjustment under limited-bandwidth conditions is therefore greatly beneficial. Additionally, the capability to remotely control bandwidth requirements or adjust video processing parameters to manage varying bandwidth conditions enables basic functionality to be maintained under non-ideal circumstances.
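Automated quality adjustment can be sketched as a ladder of encoder profiles: whenever a new estimate of available bandwidth arrives, pick the best profile whose bit rate fits within a safety margin. The ladder values, the `headroom` factor, and the function names below are hypothetical; a ground operator could "remotely control bandwidth requirements" by changing the headroom or the ladder itself.

```python
from typing import NamedTuple


class Profile(NamedTuple):
    width: int
    height: int
    fps: int
    kbps: int  # target encoder bit rate for this profile


# Hypothetical encoder ladder, ordered from best quality to lowest rate.
LADDER = [
    Profile(640, 480, 30, 2000),
    Profile(640, 480, 15, 1000),
    Profile(320, 240, 15, 400),
    Profile(320, 240, 5, 128),
]


def select_profile(available_kbps: float, headroom: float = 0.8) -> Profile:
    """Return the best profile whose bit rate fits in a fraction of the measured capacity."""
    budget = available_kbps * headroom
    for p in LADDER:
        if p.kbps <= budget:
            return p
    # Even below the lowest rung, keep a minimal feed flowing rather than none.
    return LADDER[-1]
```

A real controller would normally add hysteresis (for example, requiring the higher bandwidth to persist for some time before stepping up) so that the profile does not oscillate as the link fluctuates.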

It is evident that on UAV platforms, demands on the data handling technology are increased in several dimensions including:

1) the increasing quantity of sensors,

2) the increasing quality of sensors (higher resolution),

3) the likelihood of a limited-bandwidth data link,

4) the need for several receivers (or consumers) to view the same data, and

5) the variability in bandwidth over the course of a mission.

The complexity of the video handling and compression systems must also be balanced with the limitations of equipment space, weight, and power.
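The first, second, third, and fifth dimensions combine into one number: the compression ratio every stream must reach for all streams to fit on the link. The sketch below computes it; the four-stream payload, the 147.456 Mbit/s raw rate (640x480, 30 fps, 16 bits per pixel), and both link capacities are illustrative assumptions.

```python
def required_compression_ratio(n_streams: int, raw_mbps_per_stream: float,
                               link_mbps: float, video_share: float = 1.0) -> float:
    """Compression ratio every stream must reach for all streams to fit
    in the fraction `video_share` of the link reserved for video."""
    if link_mbps <= 0 or not 0 < video_share <= 1:
        raise ValueError("link_mbps must be positive and 0 < video_share <= 1")
    return n_streams * raw_mbps_per_stream / (link_mbps * video_share)


if __name__ == "__main__":
    RAW = 147.456  # Mbit/s: 640x480, 30 fps, 16 bits/pixel (assumed)
    # Hypothetical link capacities, for illustration only.
    for link, mbps in [("line-of-sight", 10.0), ("satellite", 1.5)]:
        ratio = required_compression_ratio(4, RAW, mbps)
        print(f"4 streams over {link} ({mbps} Mbit/s): {ratio:.0f}:1")
```

Under these assumptions the required ratio jumps from about 59:1 on the line-of-sight link to about 393:1 on the satellite link, which is why a mid-mission link change can force fewer simultaneous streams, lower resolution, or lower frame rate.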

In summary, situational awareness with UAV platforms imposes the following requirements on the video data handling system:

1) Rugged products that meet the demanding UAV environment

2) Efficient and configurable data handling (real-time parameter control)

3) Support for several input video formats (RS-170, RGB, SMPTE-294M, and so on)

4) Efficient support for several resolutions

5) Maximum compression at excellent video quality

6) Minimized overall latency

The codec to meet the need

To meet these demanding compression requirements, advances in video compression codecs are being implemented in rugged products. A suitable product must meet the extended-temperature and conduction-cooling demands of the military and aerospace industry while still delivering performance and flexibility. A video compression codec such as ITU-T H.263, H.264 (also known as MPEG-4 Part 10, or AVC), or JPEG2000 is typically used. These algorithms are widely deployed, mature, and well supported on many platforms. JPEG2000, however, is less appropriate for UAV applications because it consumes more bandwidth than H.263 or H.264 for comparable video quality.

ITU-T H.263 and H.264 provide various methods to optimize bandwidth use and video quality in a given context. As in all compression algorithms, the goal is to remove redundant information while leaving all salient details untouched. If no information at all is discarded, so that the decoded image is identical to the original, the algorithm is considered “lossless.” In practice, truly lossless compression techniques cannot reduce data to less than roughly one-third or one-quarter of its original size (a 3:1 or 4:1 ratio), even under best-case conditions, and are frequently impractical in a real-world environment, particularly under non-ideal data-link conditions.

The large majority of applications accept a certain degree of “lossy” behavior from the video codec in order to raise compression ratios into the 20:1 to 100:1 range. This is where full-frame-rate, full-frame-size video compression becomes practical for UAV applications. To achieve the necessary levels of data compression, a lossy algorithm must be employed, but with a view to maintaining the maximum possible quality.
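The gap between lossless and lossy ratios is easiest to see as bit rates. The sketch below divides an assumed raw rate for one 640x480, 30 fps stream (8-bit 4:2:2, so 16 bits per pixel) by a best-case lossless ratio and by both ends of the lossy range quoted above. The result is an average rate; real encoders vary the bits spent from frame to frame.

```python
RAW_MBPS = 640 * 480 * 30 * 16 / 1e6  # 147.456 Mbit/s; 8-bit 4:2:2 at 30 fps assumed


def compressed_mbps(raw_mbps: float, ratio: float) -> float:
    """Average bit rate after compressing by `ratio`:1."""
    return raw_mbps / ratio


if __name__ == "__main__":
    cases = [(4, "lossless, best case"), (20, "lossy, low end"), (100, "lossy, high end")]
    for ratio, label in cases:
        print(f"{ratio}:1 ({label}): {compressed_mbps(RAW_MBPS, ratio):.2f} Mbit/s")
```

Under these assumptions even best-case lossless compression leaves about 36.86 Mbit/s per stream, while 20:1 and 100:1 bring the same stream down to about 7.37 and 1.47 Mbit/s respectively.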

The ITU-T H.263 and H.264 codecs provide mechanisms to improve quality or improve compression as the available bandwidth increases or decreases, respectively. It is this flexibility that has resulted in their broad acceptance in commercial and consumer applications, and that makes them especially well suited to demanding UAV applications. One mechanism that improves bandwidth use is that H.263 and H.264 can encode only those regions of a frame that differ notably from a previous frame, and can provide a motion vector for regions that have moved within the frame.
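Motion vectors are typically found by block matching. The sketch below is a minimal full-search version, a common textbook approach rather than the method of any particular encoder: H.263 and H.264 specify how vectors are coded and decoded, and leave the search strategy to the encoder. The frame representation (lists of luma samples), block size `n`, and search range `r` are assumptions, and the returned `(dx, dy)` is the offset from the block in the current frame to its best match in the previous frame.

```python
Frame = list[list[int]]  # grayscale luma samples, row-major (assumed representation)


def sad(prev: Frame, cur: Frame, bx: int, by: int, dx: int, dy: int, n: int) -> int:
    """Sum of absolute differences between the n x n block at (bx, by) in cur
    and the block displaced by (dx, dy) in prev."""
    return sum(
        abs(cur[by + y][bx + x] - prev[by + dy + y][bx + dx + x])
        for y in range(n)
        for x in range(n)
    )


def motion_vector(prev: Frame, cur: Frame, bx: int, by: int, n: int, r: int) -> tuple[int, int]:
    """Exhaustive block matching within +/- r pixels; returns the lowest-SAD offset."""
    h, w = len(prev), len(prev[0])
    best, best_cost = (0, 0), sad(prev, cur, bx, by, 0, 0, n)
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            # Skip candidate blocks that would fall outside the reference frame.
            if not (0 <= bx + dx and bx + dx + n <= w and 0 <= by + dy and by + dy + n <= h):
                continue
            cost = sad(prev, cur, bx, by, dx, dy, n)
            if cost < best_cost:
                best, best_cost = (dx, dy), cost
    return best
```

If the scene shifts two pixels to the right between frames, the block in the current frame matches the previous frame two pixels to the left, so the sketch returns `(-2, 0)`; the encoder then sends that vector plus any small residual instead of the block's pixels.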

These “difference” frames are much smaller than frames that encode the entire image every time, as JPEG2000 codecs do, for example. This greatly increases compression without significant loss of quality, since not every pixel of a given frame needs to be retransmitted from source to destination. Because the JPEG2000 codec compresses each frame independently, reductions in available bandwidth can severely limit the achievable frame rate. For an equivalent low-bandwidth link, H.263 or H.264 compression provides better quality.
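The saving from difference frames can be illustrated with a simplified skip decision, sometimes called conditional replenishment: compare each block with the co-located block in the previous frame and resend only the blocks whose sum of absolute differences exceeds a threshold. Real H.263 and H.264 encoders combine this idea with motion compensation and residual coding; the block size, threshold, and function name here are assumptions.

```python
Frame = list[list[int]]  # grayscale luma samples, row-major (assumed representation)


def changed_blocks(prev: Frame, cur: Frame, n: int, threshold: int) -> list[tuple[int, int]]:
    """Return (bx, by) of every n x n block whose co-located SAD exceeds threshold.

    Blocks not returned would be signalled as 'unchanged' and not resent.
    """
    h, w = len(cur), len(cur[0])
    changed = []
    for by in range(0, h - n + 1, n):
        for bx in range(0, w - n + 1, n):
            cost = sum(
                abs(cur[by + y][bx + x] - prev[by + y][bx + x])
                for y in range(n)
                for x in range(n)
            )
            if cost > threshold:
                changed.append((bx, by))
    return changed
```

For example, if a single pixel changes in a 16x16 frame split into 4x4 blocks, only one block out of sixteen is flagged for retransmission, whereas an intra-only codec would re-encode all sixteen.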

An example of where the difference-frame capability provides massive compression improvement is a UAV transmitting images of a gradually changing landscape, where the differences between successive frames are minimal and most of each frame can be reconstructed from the previous frame using motion vectors. Lastly, the H.263 and H.264 codecs provide complete control over how often intra-coded frames are sent; these frames are encoded independently, so no previous information is required to decode them. The frequency of this “resync” frame can be adjusted from every single frame, in which case no history is ever needed, to once every hundred frames or more. This flexibility makes it possible to tune codec parameters for a specific system implementation, or even “on the fly” as link conditions or target characteristics change.
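The trade-off behind the resync interval can be sketched directly: the intra period sets the pattern of intra-coded (I) and predicted (P) frames, and it also bounds how long a corrupted frame can keep degrading the picture, because errors propagate through predicted frames until the next I-frame. The function names are hypothetical and the model ignores B-frames and error-concealment techniques.

```python
def frame_types(n_frames: int, intra_period: int) -> str:
    """'I' marks intra-coded (resync) frames, 'P' marks predicted (difference) frames."""
    if intra_period < 1:
        raise ValueError("intra_period must be >= 1")
    return "".join("I" if i % intra_period == 0 else "P" for i in range(n_frames))


def worst_case_resync_s(intra_period: int, fps: float) -> float:
    """Approximate longest time a corrupted frame can affect the picture
    before the next I-frame restores a clean image."""
    return intra_period / fps
```

With an intra period of 1 every frame is self-contained, at the cost of bandwidth; with a period of 120 at 30 fps, a lost or corrupted frame could leave the picture degraded for up to about 4 seconds.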

GE Fanuc Intelligent Platforms has deployed the TS-VID-MP4 product line in many manned and unmanned air vehicle applications that require video compression and transmission. The TS-VID-MP4, for example, implements the ITU-T H.263 codec, can capture up to four RS-170 video input streams, and can compress two of them simultaneously in real time with extremely low latency: as low as three frames (less than 100 ms), depending on the data-link technology employed. The TS-VID-MP4 is available in a conduction-cooled, low-power PMC package and includes a complete client/server software solution for quick expansion of UAV systems.

Several consumers with one producer

To further increase system efficiency, an extension of ITU-T H.264 called Scalable Video Coding (H.264 SVC) provides mechanisms for one encoded bitstream to be received and decoded by numerous receivers at different frame resolutions and frame rates. The control is handled at the receiver end, so the same video compression server can supply a single Ethernet packet stream that carries high-resolution, full-frame-rate video and low-resolution or reduced-frame-rate video simultaneously. This feature is extremely useful in the situational awareness context described earlier, where several receivers require access with varying data-link speeds and varying ability to handle the complexity of the decoding task.
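H.264 SVC supports spatial, temporal, and quality scalability. The sketch below illustrates only the temporal case, using a dyadic hierarchy: with three layers, layer 0 carries every fourth frame, layer 1 adds the frames halfway between, and layer 2 adds the rest, so a receiver that keeps layers 0 through k decodes a quarter, half, or full frame rate from the same stream. In a real SVC bitstream each NAL unit carries its `temporal_id` in the NAL unit header extension; here plain frame indices stand in for NAL units, and the function names are hypothetical.

```python
def temporal_id(frame_index: int, num_layers: int) -> int:
    """Dyadic temporal layer of a frame: layer 0 recurs every 2**(num_layers - 1)
    frames, and each higher layer doubles the frame rate."""
    period = 2 ** (num_layers - 1)
    pos = frame_index % period
    if pos == 0:
        return 0
    # Trailing zero bits of pos: odd positions belong to the top layer,
    # positions divisible by 2 but not 4 to the next layer down, and so on.
    trailing_zeros = (pos & -pos).bit_length() - 1
    return num_layers - 1 - trailing_zeros


def extract(frames: list[int], num_layers: int, max_tid: int) -> list[int]:
    """Sub-stream a receiver decodes when it keeps only layers 0..max_tid."""
    return [f for f in frames if temporal_id(f, num_layers) <= max_tid]
```

For eight frames and three layers, the layer pattern is 0, 2, 1, 2, 0, 2, 1, 2, so a low-bandwidth handheld receiver keeping only layer 0 gets frames 0 and 4, while the mission crew keeping all layers gets every frame. This works because the encoder only predicts frames from layers at or below their own.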

Tim Klassen is video products team leader at GE Fanuc Intelligent Platforms, an embedded computing specialist in Charlottesville, Va.