When it comes to aerospace and defense embedded computing systems, open standards continue to hold a firm place in development and deployment. These include Sensor Open Systems Architecture (SOSA), VITA, Hardware Open Systems Technologies (HOST), and Modular Open Systems Approach (MOSA).

The emerging SOSA standard, overseen by The Open Group in San Francisco, aims to enable military embedded designers to create new systems and make significant upgrades to existing systems much more quickly than today’s technologies allow.

MOSA, as the name implies, focuses on modular approaches between systems, components, and platforms, and, like SOSA, aims to lower costs and allow the rapid deployment of new technology.

HOST uses commercial off-the-shelf (COTS) components in a small form factor, like VITA’s OpenVPX standard.

Justin Moll, vice president of sales and marketing at Pixus Technologies Inc. in Waterloo, Ontario, says he is seeing a pair of trends in enclosed embedded systems. The first is that the sector is being driven by SOSA and HOST.

“The backplanes for these systems typically require VITA 66 (optical) and VITA 67 (radio frequency) housings on the backplane PCB,” Moll says. “There is also a timing slot with routing implementations supporting the clocks and system management across the OpenVPX backplane. The speeds of the backplanes are also increasing to offer 40 Gigabit Ethernet speeds and even PCI Express Gen4 (16 gigabits per second) and 100 Gigabit Ethernet requirements. With higher power demands, advanced thermal management solutions are often required. The PSUs in the SOSA-based enclosures have shifted to 12-volt heavy versions. These enclosure systems also typically require a VPX Chassis manager.”
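To put the PCI Express Gen4 figure in context, the short sketch below estimates usable bandwidth per direction for several link widths. It assumes the 16-gigabit figure is the raw per-lane transfer rate and applies the 128b/130b line encoding that PCI Express has used since Gen3. The function name and the lane widths chosen are illustrative, and the result ignores packet and protocol overhead.

```python
# Rough PCI Express Gen4 bandwidth estimate, per direction.
# Assumption: 16 GT/s is the raw rate of a single lane.
GEN4_GT_PER_S = 16.0
# 128b/130b encoding: 128 payload bits are carried in every 130 bits sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gen4_gbytes_per_s(lanes):
    """Return estimated GB/s per direction, before protocol overhead."""
    if lanes < 1:
        raise ValueError("a link needs at least one lane")
    return lanes * GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8


if __name__ == "__main__":
    for lanes in (1, 4, 8, 16):
        print(f"x{lanes:<2} link: {pcie_gen4_gbytes_per_s(lanes):6.2f} GB/s")
```

By this estimate a single lane carries a little under 2 gigabytes per second, so a four-lane link lands near 8 gigabytes per second in each direction.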

“The second trend is the adoption of SpaceVPX and larger form factor OpenVPX boards,” Moll continues. “The VITA 78 spec provides the option for 6U x 220-millimeter boards.”

SpaceVPX aims to address interoperability like OpenVPX does, except its focus is, as its name suggests, space applications. SpaceVPX defines payload, switch, controller, and backplane module profiles.

This summer, Pixus announced the release of a development chassis that supports board depths of 160 millimeters for OpenVPX and 220 millimeters for SpaceVPX.

The open-frame chassis features as many as four slots at 1-inch pitch for each board depth type. The modular enclosure allows various board pitches to be used at 0.2-inch increments. Card guides to support air- and conduction-cooled boards are standard. There also are 220-millimeter-deep card guides that are wide enough to support extra-thick SpaceVPX conduction-cooled boards per the VITA 78 open-systems industry standard.

Beyond open

In addition to open standards like VITA and MOSA, experts at Curtiss-Wright Defense Solutions in Ashburn, Va., say that 2017’s “Third Offset” strategy introduced by then-Secretary of Defense Ashton Carter continues to drive trends in embedded systems.

The Third Offset strategy aims to use technology to win and deter military conflicts while acknowledging that other world powers are catching up to American technical superiority. Open standards are key to updating embedded systems used by the American military quickly and affordably.

Jedynak says that because new and better components can slot in and slot out, the lifespan of embedded technologies is longer than in previous years.

“Some of the old way of doing things is sometimes akin to tearing down an office building and rebuilding it with all new everything, just because you need to upgrade the printer,” Jedynak jokes. “That’s not the right way to do it. It would be better if you knew that I’ve got an infrastructure in the building that allows me to yank that printer off with another one on another and take it away.”

Automated systems

Jedynak says that the holistic view of capabilities brought on by the Third Offset strategy enables systems to power smart, autonomous, or remotely piloted technologies, and is also helping to drive development in embedded systems.

“Autonomy is very hot: optionally manned or automated systems,” Jedynak says. “Intelligently finding ways to take systems ... and turn it into something that is autonomous or at least remote controlled. What sensors do we need, what processors do we need? Machine learning: how do you make a platform smarter? At the end of the day, they’re applications that will make things better. How do we use the ‘smarts’ on the platform in a way that reduces warfighter burden? Think like Iron Man and ‘JARVIS’ where there’s a lot of data coming in and it is triaged and the right information is at your fingertips.”

While SOSA and similar open standards allow for manufacturer-agnostic embedded systems for the military, Jedynak says that those standards are not hampering innovation in the industry.

“It’s not just a good idea; now it is what they’re supposed to be doing,” Jedynak says of open standards. “It’s a good thing for everybody around. You have to think a little harder when it comes to proprietary (designs), unique advantages. I used the metaphor that every automobile manufacturer doesn’t have their own custom roads out there, right? There’s a lot of room for automobile manufacturers to innovate. There’s something that makes sense to do completely on your own — you don’t need to have proprietary form factors and pin outs if your job is really about adding compute capability, right? For the most part, we need to focus on innovating on the performance.”

Eyes on performance

Experts at Mercury Systems in Andover, Mass., say they have spent more than a year powering up their company’s approach to OpenVPX bus-and-board embedded systems by focusing on performance.

“So just as an example, if you think about the way that data centers operate, they’re dealing with big data problems,” McQuaid continues. “They have large data sets they have to manage. They have significant context switches. They’re doing different tasks at different times. They’re dealing with different programs and different processes over time and of course, their security concerns that come along with all of that. What we’ve tried to do...is take that data center architecture and deploy it.”

McQuaid says the shift to data center-powered embedded systems results in a “big data problem.”

“So what we’re talking about doing is leveraging that big data architecture out of the commercial world, out of the data center and building all of those same building blocks into OpenVPX and then building subsystems where we tie those together and are in an architecture that’s identical to the way the data center does business,” McQuaid says, noting that security and infrastructure make it impossible for forward-deployed warfighters to leverage commercial data processing centers like Amazon Web Services or Microsoft’s Azure. “And so what we’re doing is bringing that data center processing to the platform itself and doing it in an open-architecture friendly manner so that it can be easily refreshed over time.”

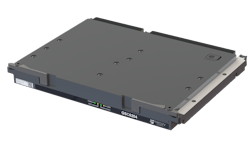

This summer, Mercury Systems announced the GSC6204, an OpenVPX 6U GPU co-processing engine based on the NVIDIA Turing architecture. It targets compute-intensive tasks like artificial intelligence (AI), radar, electro-optical and infrared imagery, cognitive electronic warfare (EW), and sensor fusion, all applications that require high-performance computing capabilities closer to the sensor.

The module is powered by dual NVIDIA Quadro TU104 processors and incorporates NVIDIA’s NVLink high-speed direct GPU-to-GPU interconnect technology, and Mercury says it brings high-level parallel processing capability out of the data center.

Deployable AI

The VPX3-4925 module is a 3U OpenVPX GPGPU processor and features an NVIDIA Quadro Turing TU106 GPU that delivers 6.4 TFLOPS/TIPS performance. It provides 2304 CUDA Cores, 288 Tensor Cores, and 36 ray-tracing (RT) Cores. For higher performance in size, weight, and power (SWaP)-constrained applications, the 3U VPX3-4935 module features an NVIDIA Quadro Turing TU104 GPU that delivers 11.2 TFLOPS/TIPS. The VPX3-4935’s higher core count includes 3072 CUDA Cores, 384 Tensor Cores, and 48 RT Cores. For more demanding applications, the 6U form factor VPX6-4955 (6144 CUDA Cores, 768 Tensor Cores, 96 RT Cores) hosts dual TU104 GPUs for 22 TFLOPS/TIPS performance.
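The core counts and throughput figures quoted for these modules can be cross-checked with some simple arithmetic. The sketch below assumes the common rule of thumb that peak single-precision throughput equals CUDA cores times two floating-point operations per clock (one fused multiply-add) times clock rate, and that each Turing streaming multiprocessor holds 64 CUDA Cores, eight Tensor Cores, and one RT Core. The clock rates it prints are back-calculated estimates rather than vendor specifications, and the function names are illustrative.

```python
# Cross-check of the quoted GPU figures.
# Core counts and TFLOPS values come from the article; everything derived
# from them below is an estimate under the stated assumptions.
FLOPS_PER_CORE_PER_CYCLE = 2   # assumption: one fused multiply-add per clock
CUDA_CORES_PER_SM = 64         # Turing streaming multiprocessor layout
TENSOR_CORES_PER_SM = 8
RT_CORES_PER_SM = 1

MODULES = {
    "VPX3-4925": {"cuda": 2304, "tensor": 288, "rt": 36, "tflops": 6.4},
    "VPX3-4935": {"cuda": 3072, "tensor": 384, "rt": 48, "tflops": 11.2},
    "VPX6-4955": {"cuda": 6144, "tensor": 768, "rt": 96, "tflops": 22.0},
}


def implied_clock_ghz(cuda_cores, tflops):
    """Clock rate in GHz that would produce the quoted peak TFLOPS."""
    return tflops * 1e12 / (cuda_cores * FLOPS_PER_CORE_PER_CYCLE) / 1e9


def counts_are_consistent(spec):
    """True if Tensor and RT Core counts match the per-SM ratios."""
    sm_count = spec["cuda"] // CUDA_CORES_PER_SM
    return (spec["cuda"] % CUDA_CORES_PER_SM == 0
            and spec["tensor"] == sm_count * TENSOR_CORES_PER_SM
            and spec["rt"] == sm_count * RT_CORES_PER_SM)


if __name__ == "__main__":
    for name, spec in MODULES.items():
        clock = implied_clock_ghz(spec["cuda"], spec["tflops"])
        status = "consistent" if counts_are_consistent(spec) else "mismatch"
        print(f"{name}: ~{clock:.2f} GHz implied, core counts {status}")
```

Under these assumptions all three sets of core counts are internally consistent, and the dual-GPU 6U module works out to roughly twice the throughput of the single TU104 board.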

Mercury Systems also has its eyes on AI with its EnsembleSeries HDS6605 general-purpose processing 6U OpenVPX blade server with hardware-enabled support for artificial intelligence applications.

“With the new second generation Intel Xeon Scalable processors, Mercury’s OpenVPX blade servers deliver a huge boost to the industry’s ability to embed the big data processing capability required for new, smarter and autonomous military missions,” says Joe Plunkett, Mercury’s senior director and general manager for sensor processing solutions. “This next-generation compute capability delivers enhanced performance and power optimized for modern AI applications which enable our customers to take data center processing capability all the way to the tactical edge.”

The second-generation Intel Xeon Scalable processors feature Intel Deep Learning Boost, which extends Intel Advanced Vector Extensions 512 (AVX-512) to accelerate inference applications like speech recognition, image recognition, language translation, and object detection. Its new set of embedded accelerators, Vector Neural Network Instructions (VNNI), speeds up the dense computations characteristic of convolutional neural networks (CNNs) and deep neural networks (DNNs), delivering up to a 14-times improvement in inference performance compared to the first-generation Intel Xeon Scalable processor. Along with increased scalability via Ultra Path Interconnect (UPI), each blade provides up to 22 cores from a single 1.9 GHz device, delivering 2.6 TFLOPS of general-purpose processing power.
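As a rough illustration of the arithmetic behind Vector Neural Network Instructions, the sketch below emulates in plain Python the 8-bit multiply-accumulate pattern of the AVX-512 instruction VPDPBUSD. In each 32-bit lane, four unsigned 8-bit values are multiplied by four signed 8-bit values and the products are added to a 32-bit accumulator; a 512-bit register holds 16 such lanes. The function names are hypothetical, and production code would rely on compiler intrinsics or an inference library rather than Python.

```python
# Simplified emulation of the int8 dot-product pattern (not Intel's code).
LANES = 16           # 512-bit register divided into 32-bit accumulators
BYTES_PER_LANE = 4   # four 8-bit values feed each 32-bit lane


def wrap_int32(value):
    """Wrap an integer into the signed 32-bit range, as hardware would."""
    return (value + 2**31) % 2**32 - 2**31


def vpdpbusd_lane(acc, unsigned_bytes, signed_bytes):
    """One lane: acc + sum of four (unsigned 8-bit x signed 8-bit) products."""
    if len(unsigned_bytes) != BYTES_PER_LANE or len(signed_bytes) != BYTES_PER_LANE:
        raise ValueError("each lane takes exactly four byte pairs")
    if any(not 0 <= u <= 255 for u in unsigned_bytes):
        raise ValueError("first operand must be unsigned 8-bit")
    if any(not -128 <= s <= 127 for s in signed_bytes):
        raise ValueError("second operand must be signed 8-bit")
    products = sum(u * s for u, s in zip(unsigned_bytes, signed_bytes))
    return wrap_int32(acc + products)


def dot_int8(activations, weights):
    """Dot product of uint8 activations and int8 weights, 64 bytes per step."""
    if len(activations) != len(weights):
        raise ValueError("inputs must have the same length")
    step = LANES * BYTES_PER_LANE
    pad = (-len(activations)) % step          # zero-pad to a full register
    a = list(activations) + [0] * pad
    w = list(weights) + [0] * pad
    acc = [0] * LANES
    for base in range(0, len(a), step):       # one emulated instruction per step
        for lane in range(LANES):
            start = base + lane * BYTES_PER_LANE
            end = start + BYTES_PER_LANE
            acc[lane] = vpdpbusd_lane(acc[lane], a[start:end], w[start:end])
    return wrap_int32(sum(acc))               # final horizontal reduction


if __name__ == "__main__":
    activations = [i % 256 for i in range(200)]
    weights = [(i % 7) - 3 for i in range(200)]
    print(dot_int8(activations, weights))
```

Each pass through the outer loop stands in for a single instruction that handles 64 multiply-accumulate operations, which is the kind of dense computation found in convolutional and deep neural network inference.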