By Steve Rood Goldman

Ethernet’s widespread use and longevity have resulted in an abundance of commercial-off-the-shelf (COTS) hardware and network application software for military use. Fast Ethernet (10/100 megabits per second) has been deployed for years, and Gigabit Ethernet is now being designed into system upgrades and new weapon systems.

The move to Gigabit Ethernet presents new challenges in realizing the benefits of increased throughput. When we talk about Ethernet, we generally include its layer 3 and layer 4 communications protocols: IP (Internet Protocol) and either TCP (Transmission Control Protocol) or UDP (User Datagram Protocol). These protocols traditionally have been handled in software because, at 10- and 100-megabit-per-second speeds, processor overhead has been acceptable.

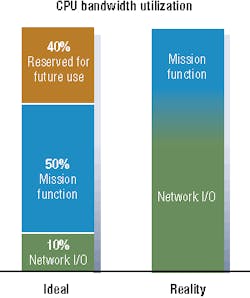

A rule of thumb used for TCP/IP is that every bit per second of network throughput consumes 1 Hz of processor bandwidth. As shown, 100-megabits-per-second throughput on a 1 GHz processor represents only 10 percent overhead. This leaves plenty of room for the mission function as well as reserved bandwidth. At gigabit speeds, the same rule predicts that TCP/IP processing alone would consume the entire 1 GHz processor; protocol handling becomes a significant overhead that can overwhelm the host.

Measurements made using the integrated Gigabit Ethernet ports on various single-board computers (SBCs) demonstrate the severity of the host-loading problem. On the SBC, layer 2 (MAC) processing is handled by the system controller or the onboard Ethernet controller. The layer 3 and layer 4 protocol stacks run on the processor, typically a PowerPC.

For a range of packet sizes, throughput is good, reaching up to 60 percent of the maximum line rate. Receiving packets, however, places a significant burden on the host. Even at rates below 300 megabits per second, host loading is 50 percent or more; at higher throughput levels, it rises to 80 percent or more.

Other than throttling back throughput, a practical method of addressing protocol-processing overhead is to use protocol offload. A TCP/IP offload engine (TOE) is implemented using dedicated processing resources based on either hard-wired implementations (commercial ASICs or FPGAs) or commercial processors.

Hard-wired, parallel-processing techniques can offer the highest throughput, but they are based on commercial silicon developed for the iSCSI market, which brings several disadvantages. First, real-time operating system (RTOS) drivers may not be available. Second, the products available on the market may not be rated for extended-temperature operation. Third, end users will most likely be subject to commercial-market product lifecycles.

Processor-based approaches cannot provide wire-speed performance, but they can offload the single-board computer as efficiently as ASIC controllers. Using a standard processor provides several advantages, including long lifecycle support, the ability to meet extended-temperature requirements, and the flexibility to implement additional protocol layers and support custom protocols.

To be clear, most commercial Ethernet controllers include some offload features, typically TCP and UDP checksum calculation and TCP segmentation offload. While these features improve performance, they provide only limited relief to the host processor. More sophisticated controllers may offload data-path processing but rely on the TCP/IP protocol stack resident on the host for exceptional conditions such as out-of-order packets or the expiration of a TCP timer.

These partial offload approaches require the host operating system to provide the necessary hooks into the protocol stack. Operating systems commonly used in commercial and storage applications, such as Windows and Linux, provide partial offload hooks. Real-time operating systems such as VxWorks, Integrity, and LynxOS do not recognize the partial offload features common to commercial controllers. The drivers for network adapters that use these controllers will function, but without offload.

The driver implementation can influence the usefulness of the TOE function. The industry-standard method of connecting a network application to the TCP/IP protocol stack is the BSD sockets interface. A socket is an endpoint for interprocess communication. As long as the host and network adapter maintain a socket interface, standard network applications such as FTP, Telnet, NFS, and CIFS will operate transparently.

The drivers for some TOE implementations use nonstandard interfaces between the application and the offloaded stack. This may be acceptable for custom-developed network applications, but it is incompatible with the use of COTS applications. Even when a TOE uses a socket interface, special parameters can be passed in the socket() call used to create the socket. These parameters identify the offloaded stack.

This approach makes it impossible to use standard network applications without modification and recompilation. For full offload to be effective, the driver implementation must maintain transparency, such that the application is unaware that protocol processing has been transferred to the TOE.

The requirement for TOE and its implementation approach are application dependent. New designs that can take advantage of the latest dual-core processors or use multiprocessor SBCs may have adequate bandwidth to handle TCP/IP processing on their own. Space- or power-limited applications, such as sensors, data loaders, and remote interface units, may not use COTS computer boards with the fastest processors. Programs retrofitting systems with Gigabit Ethernet may need to maintain existing processor architectures to limit firmware impact. These applications are good fits for a transparent TOE implementation.

Several factors need to be considered when making the choice between processor and ASIC-based TOE controllers. If maximum throughput is a primary consideration, then a controller approach can be the best fit. TOE controllers use custom processor cores and distributed memory resources to boost performance. End-to-end throughput will be limited by the design of the interface that moves data across the PCI/PCI-X bus between the network adapter card and the host. The performance difference between processor and controller-based TOE will vary with driver implementation and the host operating system choice.

Processor-based TOE may be the only choice for applications that require modification of the TCP/IP protocol stack. Custom stacks are used to enhance quality of service, reliability, and performance for demanding applications. Commercial controllers provide little support for customization because they were designed to meet general market requirements. Likewise, commercial TOE controllers do not support IPv6, which the U.S. Department of Defense has mandated. Processor-based TOE uses a software stack, providing a straightforward upgrade path to IPv6.

Military users of TOE must also consider lifecycle support. Since shifting program requirements can lead to design changes, there are benefits to choosing a flexible architecture. General-purpose processors provide the ultimate flexibility, but ASIC-based TOE controllers can offer some degree of firmware adaptability. It is critical to determine who controls the intellectual property for the TOE implementation.

System designers are already considering 10 Gigabit Ethernet for backbone connections between switches and as a backplane for loosely coupled processors. That move introduces new challenges to be addressed for military applications.

Today, fiber-optic physical media are required for multigigabit links. Existing transceivers used for 10G Ethernet, such as XENPAK, XPAK, and XFP, are bulky and power hungry compared with the SFF and SFP optical transceivers or the ubiquitous 1000BASE-T interfaces used at gigabit speed. IEEE working groups 802.3an and 802.3ap are close to ratifying standards for 10GBASE-T interconnect and 10G Ethernet copper backplane physical layers, respectively. These activities may be key enablers for military use of 10G Ethernet. Equally important will be the development of next-generation TOE. At 10G speeds, even the fastest host processors have no chance of keeping up with TCP/IP processing. Hardware assist will be mandatory.

While Gigabit Ethernet is not without its critics, it has been selected for UAV programs, helicopter and transport aircraft upgrades, various Navy applications, and the U.S. Army’s Future Combat System (FCS).

Steve Rood Goldman is product manager for high-speed networking components at Data Device Corp. in Bohemia, N.Y.