Developers of real-time embedded software take aim at code complexity

Safety, security, reliability, and performance dominate the discussion of real-time embedded operating systems and middleware as software developers confront the new frontier of multicore and multiprocessor architectures with smaller size, lighter weight, and lower power consumption.

By John Keller

Computing resources in military and aerospace electronics applications such as avionics, radar, and wide-area networking are becoming ever more complex. Systems designers not only are forced to use multiprocessing and multicore processors to save on space, weight, and power consumption, but they also have to make computers run many different programs simultaneously, from flight- and mission-critical tasks to Web browsers, all on the same chip. Systems engineers also have to make sure their systems cannot be hacked and cannot spiral into catastrophic crashes.

What’s a software programmer to do?

Today’s developers of real-time embedded software face some of the largest, most complex software architectures they have ever seen, and the ever-growing size of embedded software will make their tasks far more challenging in the future. At the same time, they face customer requirements for safety and, increasingly, for security. Safety means that one piece of system software cannot corrupt another or crash the entire system. Security means that hackers cannot break into and destroy the system.

“The complexity explosion in software is exponential,” says David Kleidermacher, chief technology officer at Green Hills Software in Santa Barbara, Calif., which specializes in real-time embedded operating systems and software-development tools. The Green Hills flagship product is the real-time operating system called Integrity.

“In the 1970s the average car had 100,000 lines of source code,” Kleidermacher explains. “Today it’s more than a million lines, and it will be 100 million lines of code by 2010. The difference between a million lines of code and 100 million lines of code definitely changes your life.”

Among military applications, the U.S. Army’s Future Combat System (FCS), which is to be a collection of manned and unmanned land vehicles, as well as unmanned air vehicles and a robust communications infrastructure, is one of the best examples of growing software complexity.

“Estimates for FCS will be 60 million lines of code developed, reused, or integrated by more than 100 contractors,” points out Rob Hoffman, vice president and general manager of aerospace and defense at Wind River Systems in Alameda, Calif. FCS program managers see that this is a massive software-development program, Hoffman says. “The program size is in the $230 billion to $300 billion range.”

The onslaught is coming at software companies from several directions. Embedded-systems designers are under tremendous pressure to reduce the size, weight, and power consumption of their computing hardware to accommodate a brave new world of applications on small autonomous vehicles, wearable computers, and smart guidance and navigation. These requirements are leading them to multiprocessing and multicore processors that handle several tasks simultaneously in innovative new ways.

“Semiconductor vendors are getting more performance with lower power and lower chip count with multicore architectures. There is more than one core on the same die,” says Tomas Evenson, chief technology officer at Wind River Systems.

Multicore processor architectures, for the most part, can be considered the leading edge of system-on-a-chip technology. Different portions of the same chip handle processing tasks independently and in parallel, which offers systems designers not only vastly more performance and functionality than they have had in the past, but also embedded computers with reduced size, weight, and power consumption.

“The trends are for more compute power-more MIPS per watt,” says Grant Courville, director of worldwide applications engineering at QNX Software Systems in Ottawa. “There is a huge move to multicore because you can get close to the same computer performance at a fraction of the power.

“Complexity has always been there, but multicore is accelerating that,” Courville continues. “Until now functionality has been divided on a per-slot basis, but now you can actually do more per slot. As a result, application density per slot is going up.”

These kinds of technology advancements have a direct bearing on software development. Put simply, an increasing number of small, complex computer-hardware architectures means there is a need for vastly more software to run them. “We have an implosion of hardware, and an explosion in software,” points out Robert Day, vice president of marketing for LynuxWorks Inc. in San Jose, Calif.

Software complexity

Like it or not, software providers have to find ways to deal with multicore and multiprocessor embedded computing architectures. Consider a passenger jetliner. In years past, the aircraft had flight-control computers that ran only the flight-critical software necessary to keep the plane safely in flight. These tasks were considered far too important to share the computer hardware with other software that might not be crucial for keeping the plane in the air. Additional tasks, such as navigation, communications, and passenger entertainment, ran on separate computer systems.

Today, aircraft designers no longer have the luxury of operating several computer systems; there just is not enough room on the platforms as the need grows for additional functionality. To conserve size, weight, and power, they are forced to run a wide variety of applications, such as flight control, communications, and navigation, on the same computer.

“Let’s take the Boeing 747 cockpit, for example. That airframe is close to 40 years old. Today there are many features that were not on the first models. The physical cockpit has not changed in size, but they need to shoe-horn things in to improve functionality; now resources are shared,” says Todd Brian, product marketing manager for the embedded systems division of Mentor Graphics Corp. in Wilsonville, Ore.

“Rather than a discrete function, one hardware platform does more than one task; you have to do multiple software systems on a single hardware platform,” says LynuxWorks’ Day. “Hardware consolidation from a software standpoint means multiple applications are using the same processor and/or peripheral hardware.”

As a result, one of the chief challenges for real-time embedded software developers is “supporting multiple processors in a more elegant way,” Day says.

Basing products on the open-system Linux operating system helps LynuxWorks users attack new multicore and multiprocessing systems by providing a way to reuse relatively old software code and also to continue using existing software programs that are appropriate to the tasks at hand. “Our adherence to open-systems standard software helps our customers run off-the-shelf software as well as their proprietary applications,” Day says.

The challenges of rising system complexity for software developers cannot be overstated. “There is a movement to more complex systems, and the operating system is forced to take on a larger role in managing that complexity,” says Green Hills’s Kleidermacher.

“We have passed a critical juncture where a new paradigm is required,” Kleidermacher continues. “You get to a certain size of the software where your odds of getting a really serious error are too high. We have to change the whole rules of engagement.”

To enable the Integrity real-time operating system to handle these issues, Green Hills engineers are improving their software’s support for multicore processors, and shortly are set to introduce support for symmetric multiprocessing.

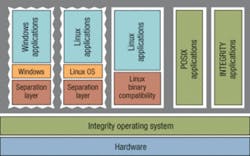

Green Hills engineers also are investigating the use of “virtual machines,” an approach in which software is “fooled” into behaving as though it is the only program running on the processor. In reality several different programs are running on the processor, but none of them is aware of the others, and no code interaction is involved.

Some engineers refer to the different processing nodes of multicore architectures as “offload engines” that can handle computing tasks that are not necessarily part of the core processing task at hand, but that are nonetheless crucial to accomplishing what needs to be done.

“On the same chips with the main processors are adjacent offload engines. That is driving the architecture of our real-time operating system and middleware,” says Wind River Systems’s Hoffman. Wind River’s flagship real-time operating system is called VxWorks.

Operating-system security

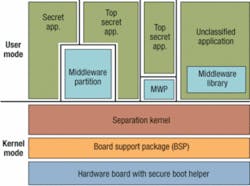

Companies that specialize in real-time embedded operating systems and middleware are confronting these challenges in different ways. Most agree, however, that isolating the operating system or operating-system kernel in some way from other software is key to security. This approach is often necessary to ensure that software cannot corrupt application code and that the system can handle different levels of security, ranging from access by anyone to the highest levels of secrecy.

The idea is not only to segregate the operating system from the application code it runs, but also to keep the different tasks the operating system runs separate from one another.

“More and more companies are seeking to use third-party software in their systems, and you really want to make sure that third-party code can’t bring down your entire system, even if an error is benign,” says Brian of Mentor Graphics.

“Making the kernel bullet-proof, where application code cannot bring down the system or the integrity of the kernel, refers to the partitioning of data and tasks so that one task doesn’t have to communicate or have visibility into other tasks,” Brian says. “The task should have no knowledge of any other part of the system.”

Mentor’s real-time operating system, called Nucleus, is designed for applications such as aircraft, navigation aids, sophisticated radios, rail transportation, medical instruments, and hazardous chemical manufacturing. “The kernel has evolved along with middleware to where more and more people have their hands in it,” Brian says.

The Neutrino real-time operating system from QNX, for example, is a microkernel that, with other software components and middleware, enables users to scale up from small handheld devices with ARM-type processors with relatively low power and speed to other, larger applications, Courville says.

Applications do not link directly to the microkernel so as to safeguard the kernel and other software components from inadvertent or intentional corruption. “The more you can keep outside the kernel, the better,” he says.

“The key thing about linking anything into the kernel is it gets access to anything in the kernel, so there’s no protection there,” Courville says. “The microkernel architecture also makes it simpler to do upgrades by downloading new drivers. It’s more flexible and dynamic because the new upgrades are operating outside the kernel.”
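The idea of keeping drivers outside the kernel can be illustrated with a toy message router. The sketch below is a minimal analogy under stated assumptions, not QNX Neutrino code: the `MicroKernel` class, its `register` and `send` methods, and the status strings are all hypothetical. In a real microkernel the protection comes from separate, hardware-enforced address spaces; here, catching a Python exception merely stands in for that containment.

```python
from typing import Any, Callable, Dict, Tuple


class MicroKernel:
    """Hypothetical toy router: it only passes messages; 'drivers' live outside it."""

    def __init__(self) -> None:
        self._servers: Dict[str, Callable[[Any], Any]] = {}

    def register(self, name: str, handler: Callable[[Any], Any]) -> None:
        # Registering under an existing name swaps in a new driver (an "upgrade")
        # without modifying or restarting the router itself.
        self._servers[name] = handler

    def send(self, name: str, msg: Any) -> Tuple[str, Any]:
        handler = self._servers.get(name)
        if handler is None:
            return ("no_such_server", None)
        try:
            return ("ok", handler(msg))
        except Exception as exc:
            # Analogy only: a faulty driver yields an error reply and the router
            # keeps running. Real isolation comes from hardware memory protection.
            return ("driver_fault", repr(exc))


# Usage: upgrade a driver at run time and survive a buggy one.
kernel = MicroKernel()
kernel.register("serial", lambda m: m.upper())
print(kernel.send("serial", "ping"))   # ('ok', 'PING')
kernel.register("serial", lambda m: m[::-1])
print(kernel.send("serial", "ping"))   # ('ok', 'gnip')
kernel.register("buggy", lambda m: 1 // 0)
print(kernel.send("buggy", "x")[0])    # driver_fault
```

The router never links driver code into itself; it only holds references it can replace, which is the property that makes in-field driver upgrades simple.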

QNX also is introducing an operating system product with adaptive partitioning that enables users to set the number and sizes of operating system partitions. “You can say this partition gets 20 percent of my CPU and 30 percent of my memory, and no more,” Courville says. In addition, “If in that first partition I use only half the resources allotted, we will not spin and waste the CPU; we will allow other things to run.”
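The behavior Courville describes, guaranteed shares plus reuse of idle time, can be modeled as a two-pass allocation over one scheduling window. This is a minimal sketch assuming CPU time is counted in whole ticks and each partition reports its demand up front; the `Partition` class and `allocate` function are hypothetical, do not reflect the actual QNX scheduler, and do not model the memory cap.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Partition:
    name: str
    budget_pct: int  # guaranteed share of the CPU window (hypothetical units)
    demand: int      # ticks of work this partition wants in the window


def allocate(partitions: List[Partition], window_ticks: int = 100) -> Dict[str, int]:
    """Return ticks granted to each partition in one scheduling window.

    Pass 1 honors each guaranteed budget, capped by actual demand.
    Pass 2 hands leftover ticks to partitions that still have demand,
    so an under-used budget is not wasted on idle spinning.
    """
    assert sum(p.budget_pct for p in partitions) <= 100, "budgets exceed 100%"
    granted = {}
    for p in partitions:
        guaranteed = window_ticks * p.budget_pct // 100
        granted[p.name] = min(guaranteed, p.demand)
    spare = window_ticks - sum(granted.values())
    # Distribute spare ticks one at a time, round-robin, to partitions with unmet demand.
    while spare > 0:
        hungry = [p for p in partitions if granted[p.name] < p.demand]
        if not hungry:
            break  # nobody wants more time; only now would the CPU truly idle
        for p in hungry:
            if spare == 0:
                break
            granted[p.name] += 1
            spare -= 1
    return granted


parts = [Partition("A", 20, 10), Partition("B", 30, 80), Partition("C", 50, 60)]
print(allocate(parts))  # {'A': 10, 'B': 35, 'C': 55}
```

Partition A uses only half of its 20-tick guarantee, so its 10 spare ticks are split between the two busier partitions instead of being spent idling, while no partition ever receives less than its guarantee when it has work.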

One of the most well-known proponents of operating-system partitioning is Green Hills Software. “People use these devices to partition the components and rely on the operating system to limit potential damage to keep it from spreading to other parts of the system,” says Green Hills’s Kleidermacher.

Integrity, Kleidermacher says, represents a new paradigm in how to build an embedded system. “We can guarantee that a process cannot corrupt another process, and offer guaranteed resources to parts of the system,” he says. Green Hills engineers refer to partitions in their software as “padded cells.”

This approach also adds flexibility by enabling systems to run several operating systems simultaneously and safely, which is a plus in helping systems designers reuse existing software code.

“We have the ability to run the Linux OS unmodified, or Windows on top of Integrity with our padded cells,” Kleidermacher says. “I can have a fully partitioned Windows or Linux environment that can do whatever it wants. It can be completely protected but still running on the same processor with Integrity.”

For LynuxWorks, security has a lot to do with communications within software. The Lynx Certifiable Stack (LCS) is a stand-alone TCP/IP software communications stack for safety-critical systems such as avionics and communications. It is certifiable to DO-178B Level C.

“The communications part has become a lot more complex,” says LynuxWorks’s Day. “The needs for a secure networking stack, in addition to an operating system, are becoming more relevant.”

Wind River’s Hoffman also points to the need to isolate one application from another, not only to prevent cross-corruption, but also to enable multilevel security, or the ability for users of different security clearances to access the system and retrieve only the data for which they are authorized.
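One common way to formalize “retrieve only the data for which they are authorized” is the Bell-LaPadula simple-security (“no read up”) rule: a user may read an item only if the user’s clearance is at least the item’s classification and the user holds every compartment attached to it. The sketch below applies that rule as a filter. It is a swapped-in textbook model covering only the read side, not Wind River’s implementation, and the level names, the compartment `ALPHA`, and the `readable` function are illustrative.

```python
from enum import IntEnum
from typing import Iterable, List, Tuple


class Level(IntEnum):
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


# (item name, classification, required compartments): illustrative data only
Record = Tuple[str, Level, frozenset]


def dominates(clearance: Level, held: frozenset, level: Level, needed: frozenset) -> bool:
    """True if the user's label is at least as high as the item's label."""
    return clearance >= level and needed <= held


def readable(records: Iterable[Record], clearance: Level, held=frozenset()) -> List[str]:
    """Return names of records the user may read under 'no read up'."""
    return [name for name, level, needed in records
            if dominates(clearance, frozenset(held), level, needed)]


records = [
    ("weather", Level.UNCLASSIFIED, frozenset()),
    ("track", Level.SECRET, frozenset()),
    ("strike_plan", Level.SECRET, frozenset({"ALPHA"})),
    ("source", Level.TOP_SECRET, frozenset()),
]
print(readable(records, Level.SECRET))             # ['weather', 'track']
print(readable(records, Level.SECRET, {"ALPHA"}))  # ['weather', 'track', 'strike_plan']
```

Compartments capture need-to-know: two users with the same SECRET clearance see different data depending on which compartments they hold.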

“Because of the GIG [Global Information Grid], everything is going to be connected to achieve better situational awareness,” Hoffman says. “Every element the commander deals with must be connected, from warfighters, to munitions, to devices, planes, and ships. The fact that everything is connected means there is a dangerous opportunity for the intermixing of information in inappropriate ways.”

Wind River engineers also are investigating how to build software architectures with safe communications links between operating systems. That goal, however, is somewhat far off. “It’s still in its infancy,” Hoffman says. “It’s not clear how to do communications between secure operating systems. Remember, also, that a secure operating system only solves 10 percent of your problem.”

Secure systems

The next step beyond safe software, which guards against inadvertent code corruption in complex software architectures, is secure software, which guards against the intentional corruption of software by malicious hackers, or even by national enemies.

Most real-time embedded software developers agree that the ability to build safe software is the foundation on which to build secure software.

“A pedigree in safety systems helps you move into secure systems,” says LynuxWorks’s Day. “Secure software includes layers on top of the safety system. We are essentially using our expertise in safe systems to do this, rather than reinvent the technology.”

Mentor Graphics engineers plan to announce security extensions to the Nucleus Plus operating system. In addition, the company relies on existing security features, such as encryption, in the Nucleus software. While the DO-178B software standard is a major benchmark of military and aerospace software safety, Mentor officials are adhering to the industrial IEC 61508 functional-safety standard.

Military and aerospace systems designers who need to approach DO-178B-level safety generally are comfortable with IEC 61508, Brian says.

Real-time middleware offers the next generation of systems interoperability

The so-called “middleware” segment of the software industry may be in its infancy for real-time embedded military and aerospace applications, yet middleware has the potential to kick-start a new generation of systems interoperability.

In fact, middleware offers the possibility of creating a transparent and seamless interface between computer hardware and peripherals, application software code, and real-time operating systems, and eventually may replace operating systems as the interface layer between computer hardware and application software, experts predict.

The idea of middleware is to provide a standard way for hardware and software providers to configure their products so that the widest variety of hardware and software possible can work together seamlessly.

Military systems integrators particularly like the notion of middleware. It enables them to use the best technology from industry without the need for long-term relationships with only a handful of providers.

“Middleware provides the isolation layer between application software and the computing layer,” explains Bob Martin, director of software for the Zumwalt-class destroyer program at Raytheon Integrated Defense Systems in Tewksbury, Mass.

Raytheon is the mission systems integrator for the future U.S. Navy Zumwalt-class destroyer, also known as DDG-1000. As such, Raytheon essentially is responsible for most of the electronic and electro-optic systems and subsystems that will go aboard the future warship.

Middleware was particularly useful for Raytheon engineers when, during integration experiments, they migrated computer hardware and software from Sun workstations running the Solaris operating system to IBM servers running Red Hat Linux. “Middleware helped us switch out Sun for the IBM/Linux combination in just a couple of days,” Martin says. “It allows that isolation layer so we are not locked into just one hardware vendor.”
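The value of such an isolation layer is that application code is written against an interface rather than a platform. A minimal sketch, assuming a hypothetical `Transport` interface: two interchangeable backends stand in for two different hardware and operating-system combinations, and the application function `publish_track` runs unchanged on either. None of these names come from Raytheon’s program or any middleware product.

```python
import queue
from abc import ABC, abstractmethod
from collections import defaultdict, deque
from typing import Deque, Dict, List


class Transport(ABC):
    """Hypothetical isolation layer: the only messaging API applications see."""

    @abstractmethod
    def send(self, dest: str, payload: bytes) -> None: ...

    @abstractmethod
    def receive(self, dest: str) -> List[bytes]: ...


class DequeTransport(Transport):
    """Stand-in for platform A's messaging backend."""

    def __init__(self) -> None:
        self._queues: Dict[str, Deque[bytes]] = defaultdict(deque)

    def send(self, dest: str, payload: bytes) -> None:
        self._queues[dest].append(payload)

    def receive(self, dest: str) -> List[bytes]:
        q = self._queues[dest]
        out = list(q)
        q.clear()
        return out


class QueueTransport(Transport):
    """Stand-in for platform B's backend, built on a thread-safe queue."""

    def __init__(self) -> None:
        self._queues: Dict[str, "queue.Queue[bytes]"] = defaultdict(queue.Queue)

    def send(self, dest: str, payload: bytes) -> None:
        self._queues[dest].put(payload)

    def receive(self, dest: str) -> List[bytes]:
        out: List[bytes] = []
        q = self._queues[dest]
        while True:
            try:
                out.append(q.get_nowait())
            except queue.Empty:
                return out


def publish_track(transport: Transport, track_id: int, bearing_deg: float) -> None:
    # Application logic: no knowledge of which platform sits underneath.
    transport.send("tracks", f"{track_id}:{bearing_deg}".encode())


# Switching platforms means changing only the object passed in.
for backend in (DequeTransport(), QueueTransport()):
    publish_track(backend, 7, 90.5)
    print(type(backend).__name__, backend.receive("tracks"))
```

Porting to a new platform means writing one new `Transport` subclass; every function like `publish_track` is left untouched.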

It is that isolation layer that is so attractive. “Middleware fundamentally enables people to write applications that span several computing nodes,” explains Steve Jennis, senior vice president of corporate development at middleware provider PrismTech Corp. in Burlington, Mass.

Middleware for enterprise computing applications, where real-time requirements are minimal, has been in place for several years, but middleware is just beginning to come into its own for real-time embedded military and aerospace applications.

“Middleware essentially is the guts of distributed applications; it’s the plumbing, and you build your applications on top of that,” says Gordon Hunt, chief applications engineer at middleware provider Real Time Innovations (RTI) in Santa Clara, Calif.

Middleware, Hunt explains, is infrastructure software that deals with data and/or message transfers. “At a primitive level you open a connection, send a message, and the recipient gets it,” he says. “Middleware steps in and does information transition, formatting of data, determines where the message needs to go, and who needs it.”
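The routing and formatting steps Hunt describes can be sketched as a tiny topic-based publish/subscribe bus. This is a minimal illustration, assuming JSON as the wire format and in-process callbacks as subscribers; the `Bus` class is hypothetical and is not the DDS API, which adds typed topics, quality-of-service policies, and network discovery.

```python
import json
from collections import defaultdict
from typing import Any, Callable, Dict, List

Sample = Dict[str, Any]


class Bus:
    """Hypothetical topic-based publish/subscribe bus (not the DDS API)."""

    def __init__(self) -> None:
        self._subs: Dict[str, List[Callable[[Sample], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Sample], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, sample: Sample) -> int:
        # Formatting: marshal the sample once into a wire representation.
        wire = json.dumps(sample, sort_keys=True).encode("utf-8")
        # Routing: deliver only to this topic's subscribers; the publisher never names them.
        receivers = self._subs.get(topic, [])
        for callback in receivers:
            # Each subscriber decodes its own copy, so none can alter another's data.
            callback(json.loads(wire.decode("utf-8")))
        return len(receivers)


bus = Bus()
display: List[Sample] = []
bus.subscribe("radar/tracks", display.append)
bus.subscribe("radar/tracks", lambda s: print("fire control saw track", s["id"]))
print(bus.publish("radar/tracks", {"id": 7, "range_km": 12.5}))  # callback line, then 2
print(bus.publish("nav/fix", {"lat": 42.0}))  # 0: nobody subscribed
```

The publisher names only a topic; deciding who receives the sample, and in what format, is left entirely to the middleware layer.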

Middleware typically is based on one of two primary software standards: CORBA, short for Common Object Request Broker Architecture, and DDS, which is short for Data Distribution Service. “Essentially these are two ways of reaching similar but fundamentally different ways of integration between applications,” explains PrismTech’s Jennis. Both standards, he says, provide “write once, run anywhere” software capability.
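The two standards differ mainly in integration style: CORBA centers on invoking operations on remote objects through an object request broker, while DDS centers on publishing data samples to topics. The sketch below illustrates the CORBA-style request/reply pattern in miniature, assuming a hypothetical in-process `ToyORB` that marshals each call as JSON. It does not use any real CORBA API; a real ORB would use stubs generated from IDL and carry requests over the network.

```python
import json
from typing import Any, Dict


class TrackService:
    """Servant: the object implementation on the server side (hypothetical)."""

    def get_position(self, track_id: int) -> Dict[str, Any]:
        return {"id": track_id, "lat": 10.0, "lon": 20.0}


class ToyORB:
    """Hypothetical broker: resolves names to objects and dispatches marshaled calls."""

    def __init__(self) -> None:
        self._objects: Dict[str, Any] = {}

    def bind(self, name: str, servant: Any) -> None:
        self._objects[name] = servant

    def resolve(self, name: str) -> "Stub":
        return Stub(self, name)

    def invoke(self, request: bytes) -> bytes:
        # Unmarshal the request, call the named operation, marshal the reply.
        req = json.loads(request)
        servant = self._objects[req["object"]]
        result = getattr(servant, req["op"])(*req["args"])
        return json.dumps(result).encode("utf-8")


class Stub:
    """Client-side proxy: method calls become request messages to the broker."""

    def __init__(self, orb: ToyORB, name: str) -> None:
        self._orb = orb
        self._name = name

    def __getattr__(self, op: str):
        def call(*args: Any) -> Any:
            request = json.dumps({"object": self._name, "op": op, "args": list(args)})
            return json.loads(self._orb.invoke(request.encode("utf-8")))
        return call


orb = ToyORB()
orb.bind("TrackService", TrackService())
tracks = orb.resolve("TrackService")
print(tracks.get_position(7))  # {'id': 7, 'lat': 10.0, 'lon': 20.0}
```

The client calls what looks like a local method and waits for the reply; in a publish/subscribe design the server would instead push position samples to whoever has subscribed, without a request from each client.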

Essentially middleware enables systems designers to decouple their software applications because middleware handles the semantics of how software tasks will be achieved. “Middleware lets people concentrate on their applications,” Hunt says. “Systems are amazingly complex these days.”

RTI has been developing real-time middleware since 1995, commercializing middleware technology originally developed at Stanford University. “We have always been designed for fast real-time distribution of data,” Hunt says. “Our focus is on getting things there fast and being very efficient with the operating system.”

Middleware may offer systems designers far more, however, than a simple software/hardware interface. Software-applications designers can instead treat middleware, rather than a particular computer processor or operating system, as the target platform.

“When the middleware becomes the platform, you can model to run on it,” Jennis says. “It is vendor- and hardware-independent, by targeting to the middleware from the chip and operating system. If at the model level your code is processor independent, you can port that application forward to the next generation of hardware when you need to.”

The emergence of programmable hardware, such as field-programmable gate arrays (FPGAs), also may put a new twist on middleware, Jennis says.

“When the hardware is more programmable, will the operating system become firmware in the chip?” Jennis muses. “There is nothing to stop a real-time operating system from becoming an IP core on a programmable device. There is also nothing to stop a middleware from becoming an IP core on a programmable chip. The real-time operating system could license to the chip vendors and would be a component of the hardware.

“The implications of that could be quite dramatic,” Jennis continues. “There could be the same thing in embedded systems this decade as what happened with Java in the last decade, when enterprise applications developers wrote software in Java and didn’t care where it ran.”

Open Architecture Business Model to unleash the innovation of small companies

The U.S. Navy is pursuing an innovative way of contracting for technology goods and services that not only may free prime systems integrators from locking themselves into a small number of subcontractors, but also may help them make maximum use of the technological innovation of small suppliers.

It is called the Open Architecture Business Model, and is being used on the Navy Zumwalt-class destroyer program, on amphibious transport dock ships, and is under consideration for the nation’s fleet of attack and ballistic missile submarines.

“We don’t want to lock in to any one company,” says Bob Martin, director of software for the Zumwalt-class destroyer program at Raytheon Integrated Defense Systems in Tewksbury, Mass. Raytheon is the mission systems integrator for the Zumwalt-class destroyer, and is responsible for the electronic and electro-optic systems and subsystems that will go aboard.

The Open Architecture Business Model allows Raytheon to run continuous competitions for electronic goods like computer servers and software middleware. The company runs a new competition for software every two years and for computer hardware every four years.

“When nobody is locked in for life you have to come in with innovation and competitive cost,” Martin says. “People that lose competitions know they can get another chance not too far down the line; they are not locked out forever. Keeps pressure on the incumbent to do better than a good job to keep the business. We think it makes sense, and gets the best value for the Navy, and keeps cost affordable.”

Software that helps enable systems designers to write application code that is independent of the hardware on which it will run is a key component of the Open Architecture Business Model, Martin says.

On the Zumwalt destroyer program, Raytheon is using 1,400 software engineers across 33 companies to write about 6 million new lines of application software, as well as using commercial off-the-shelf (COTS) hardware and software components from 43 COTS vendors. In this environment, “the Navy wanted something that will go on for the next 40 to 50 years,” Martin says.

The Open Architecture Business Model will help the Navy and its systems integrators use the best available technology from industry without tying themselves into particular contractors, software operating systems, or computer hardware, he says.