The brave new world of embedded computing backplanes and chassis

Military embedded computing systems based on bus-and-board architectures are advancing quickly on three fronts: data throughput; thermal management; and standards-based designs. These three technology trends promise to bring the latest advances in high-performance computer processors to demanding applications like electronic warfare (EW), signals intelligence (SIGINT), and radar signal processing.

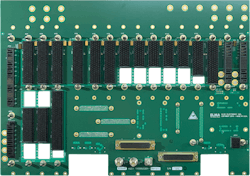

Ruggedized enclosures for embedded systems remain important, but they no longer represent the leading edge of embedded systems chassis and databuses. Those increasingly reflect new open-systems standards, blended optical and copper interconnects, and electronics cooling approaches for computer boxes that can dissipate more than 2,000 Watts.

It’s all part of the latest trends in databuses and enclosures that will produce some of the most powerful embedded computing systems ever developed. These systems will be designed to accept quick upgrades, accommodate fast-changing technologies, and bring server-grade computing to ruggedized digital signal processing systems aboard aircraft, on ships and submarines, and on the latest armored combat vehicles.

Data throughput

Many military embedded computing systems are developed today using the OpenVPX standard of the VITA Open Systems …

“What’s really been driving the last part of last year is moving data rates on three fronts: in VPX itself, in COM Express, and FMC [FPGA Mezzanine Card], all up into much higher speeds,” Munroe says.

“Bandwidth is going up, and it can be networked,” says Ken Grob, director of embedded technology at Elma. “From a payload standpoint, there are applications where you need to send lots of data between boxes, or between a collection point in the front-end, and distribute it to payload cards.”

FMC module interconnects to carrier cards are being pushed to 57 gigabits per second to accommodate blazingly fast microprocessors. FMC modules come in two sizes: 69 millimeters wide — not much bigger than a credit card — and double-width 139 millimeters wide. These modules pack a substantial amount of computing power in relatively small spaces.

“We are seeing higher-speed backplanes; that is certainly a trend,” says Justin Moll, vice president of sales and marketing at embedded computing specialist Pixus Technologies in Waterloo, Ontario. “There also is interest in hundred-gigabit speeds.”

Moving to that kind of data throughput can require use of the RT 3 connector on the backplane — a high-performance iteration of the VPX backplane databus connector, Moll says. “It has the pin paths that are smaller, and that helps with signal integrity.”
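To put “hundred-gigabit speeds” in per-lane terms, the sketch below uses IEEE 802.3 100GBASE-KR4 backplane Ethernet as an example. That protocol choice is an assumption for illustration, because the article does not name one. The scheme runs four lanes at 25.78125 Gb/s each with 64b/66b line coding, and the function name is hypothetical.

```python
# Sketch: per-lane rates needed to reach an aggregate "hundred-gigabit" link.
# Assumption: IEEE 802.3 100GBASE-KR4 backplane Ethernet (4 lanes, NRZ,
# 64b/66b line coding) is used as the example; the article names no protocol.

LANES = 4
LINE_RATE_GBPS = 25.78125      # signaling rate per lane (NRZ), Gb/s
ENCODING_EFFICIENCY = 64 / 66  # 64b/66b: 64 payload bits per 66 line bits


def payload_rate_gbps(lanes: int, line_rate: float, efficiency: float) -> float:
    """Aggregate payload data rate in Gb/s across all lanes."""
    return lanes * line_rate * efficiency


total = payload_rate_gbps(LANES, LINE_RATE_GBPS, ENCODING_EFFICIENCY)
print(f"{LANES} lanes x {LINE_RATE_GBPS} Gb/s -> {total:.1f} Gb/s payload")
```

Roughly 25 Gb/s on each differential pair is the regime where connector signal integrity becomes a limiting factor. That fits Moll’s point about the RT 3 connector.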

Optical interconnects

Such speeds may be approaching the limits of copper interconnects, and in some cases will require use of optical fiber. “As data rates get faster and faster, we are outpacing our ability to run over copper, so we are looking at optical fiber,” echoes Chris Ciufo, chief technology officer and chief commercial officer at General Micro Systems (GMS) Inc. in Rancho Cucamonga, Calif.

Among the enabling technologies facilitating a move to optical fiber interconnects is the notion of apertures in electronics enclosure designs, says Elma’s Munroe. Apertures are cutouts that accommodate coaxial and fiber optic connectors.

“Apertures is a new concept in VPX, where we have some standard cutouts on the backplanes,” Munroe says. “The acceptance of apertures helps move data through a Eurocard backplane, and to move cables off the front of the card, and move rugged applications of RF and optical cables onto the rear of the cards.”

Use of apertures is described in the ANSI/VITA 67.3 standard, Munroe explains. There are about five different apertures, and the three most important are the half-size D aperture, the full-size C aperture, and the full-plus-half-size E aperture. “Industry has moved to at least one-inch slot width, and the larger apertures allow more coaxial and fiber optic contacts,” he says.

“The range of connectors in an aperture-type architecture can vary,” Munroe says. “It allows a lot of flexibility. The connectors can accommodate a mixture of optical and RF, and the user will find it easy to change these modules. The apertures are only held by a couple of screws and alignment pins.”

The spirit of this design approach blends standard and custom design, Munroe points out. “The standard tells you the size of the aperture in the backplane, and where the mating is, but the number of contacts can be done to suit the equipment, and can be changed easily in the backplane,” Munroe says. “We can have optical signals going slot-to-slot; it does not require backplanes for these high-speed lanes. They are for optical or RF point-to-point interconnects, to connect to cards or antennas.”
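Munroe describes a split in which the standard fixes the aperture while the contact mix stays configurable. That split can be sketched as a small data model. This is a hypothetical illustration. The relative sizes assigned to the D, C, and E apertures and the CONTACTS_PER_UNIT budget are placeholders, not dimensions or contact counts from VITA 67.3. The sketch also treats RF and optical contacts as occupying equal space, which real connectors do not.

```python
from dataclasses import dataclass
from enum import Enum


class Aperture(Enum):
    """Aperture sizes named in the article. Values are relative widths in
    half-aperture units (an illustrative assumption, not VITA 67.3 data)."""
    D = 1  # half-size
    C = 2  # full-size
    E = 3  # full-plus-half-size


# Hypothetical number of contact positions per half-aperture unit.
CONTACTS_PER_UNIT = 4


@dataclass
class ApertureModule:
    """A swappable connector module: the backplane fixes the aperture,
    while the mix of RF (coax) and optical contacts is chosen per system."""
    aperture: Aperture
    rf_contacts: int
    optical_contacts: int

    def capacity(self) -> int:
        """Total contact positions this aperture can hold (placeholder model)."""
        return self.aperture.value * CONTACTS_PER_UNIT

    def fits(self) -> bool:
        """True if the requested contact mix fits in the fixed aperture."""
        if self.rf_contacts < 0 or self.optical_contacts < 0:
            return False
        return self.rf_contacts + self.optical_contacts <= self.capacity()


# Same full-size aperture, two different contact mixes for two systems.
radar_module = ApertureModule(Aperture.C, rf_contacts=6, optical_contacts=2)
ew_module = ApertureModule(Aperture.C, rf_contacts=2, optical_contacts=6)
print(radar_module.fits(), ew_module.fits())
```

The design point is that the envelope is the interoperability contract. Only the module behind it changes from one program to the next.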

Primary applications for aperture-type designs are EW, radar, jamming, different types of frequency hopping, and software-defined radio. “The signals go out through the rear of the backplane,” Munroe says.

These speed increases didn’t happen overnight; they have been taking place over roughly the past four to five years, experts say. “We are starting to see the modules actually implemented, with the ability to make a system that is interoperable, and make it quickly,” says Elma’s Grob. “The technology is becoming real, can be adopted, and can demonstrate interoperability that can be reused and stood-up into different systems. There is momentum building.”

Thermal management

GMS’s Ciufo characterizes the thermal management challenges that today’s embedded computing designers face. “Processors are consuming more and more power, and generating more and more heat. We have been introducing rackmount servers, starting with the Intel Xeon E5, and upgraded to the new Intel Xeon Scalable processor and second-generation Scalable processors.”

Yet the heat continues to rise, he says. “Intel’s latest and greatest middle-of-the-road 20- and 24-core Scalable processors consume 150 Watts per processor,” Ciufo continues. “Our systems have two to four processors, so easily it can get to 300 Watts for only two processors and 600 Watts for four processors.”

Still, today’s leading-edge embedded computing systems rely on more than just processors. “Adding two artificial intelligence cards from Nvidia is another 500 Watts, 250 Watts per processor. These systems easily are consuming in excess of 2,000 Watts and more. No longer is it trivial to air-cool rackmount servers, so we have stepped up our game.”
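The quoted figures can be tallied directly. The sketch below is simple arithmetic on Ciufo’s per-device numbers: 150 Watts per Xeon Scalable processor and 250 Watts per Nvidia AI card. The function name is illustrative.

```python
# Power-budget arithmetic using the per-device figures quoted by Ciufo.
CPU_WATTS = 150  # per 20- or 24-core Xeon Scalable processor (quoted)
GPU_WATTS = 250  # per Nvidia AI accelerator card (quoted)


def compute_power(cpus: int, gpus: int) -> int:
    """Watts drawn by the processors and accelerator cards alone."""
    return cpus * CPU_WATTS + gpus * GPU_WATTS


for cpus in (2, 4):
    print(f"{cpus} CPUs + 2 AI cards: {compute_power(cpus, 2)} W")
```

Under these figures the processors and AI cards alone account for 800 to 1,100 Watts. The rest of the 2,000-Watt figure presumably comes from items the quote does not itemize, such as memory, storage, networking, and power-conversion losses.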

It’s not just GMS that must step up its game, but also every other high-performance embedded computing designer who seeks to serve this market. “Cooling is kind of the tool kit,” says David Jedynak, chief technology officer at the Curtiss-Wright Corp. Defense Solutions division in Ashburn, Va. “There are no new physics to magically cool things. At the chassis level, the thermal design can be very focused for the type of platform, but on the board there are only a few ways we can cool them.”

For GMS air-cooled chassis and enclosures, “we have taken a page out of the VITA 48 playbook to make sure we are doing managed air flow, essentially to make certain we can cool systems that consume in excess of 2,000 Watts,” Ciufo says. “We are doing computational fluid dynamics to make sure the air moves where it needs to, and moves the heat out of the back of the chassis.”
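A first-order energy balance, P = ṁ · c_p · ΔT, shows why airflow at the 2,000-Watt level has to be managed deliberately. The sketch assumes sea-level air near 30 °C and a designer-chosen inlet-to-exhaust temperature rise. Those constants are assumptions, not GMS figures, and the result covers bulk flow only.

```python
# Bulk airflow needed to carry a heat load out of a chassis, from the
# steady-state energy balance P = m_dot * c_p * delta_T.
# Assumptions (not from the article): sea-level air near 30 C, and an
# allowable inlet-to-exhaust temperature rise chosen by the designer.

AIR_CP = 1005.0       # specific heat of air, J/(kg*K)
AIR_DENSITY = 1.16    # kg/m^3, roughly sea-level air near 30 C
M3S_TO_CFM = 2118.88  # cubic meters per second -> cubic feet per minute


def required_airflow_cfm(heat_watts: float, delta_t_kelvin: float) -> float:
    """Volumetric airflow (CFM) that removes heat_watts with a delta_t_kelvin rise."""
    mass_flow = heat_watts / (AIR_CP * delta_t_kelvin)  # kg/s
    volume_flow = mass_flow / AIR_DENSITY               # m^3/s
    return volume_flow * M3S_TO_CFM


for rise in (10.0, 20.0):
    cfm = required_airflow_cfm(2000.0, rise)
    print(f"2,000 W with a {rise:.0f} K rise: {cfm:.0f} CFM")
```

With a 10 K rise the result is on the order of 360 CFM, and doubling the allowed rise halves the required flow. The bulk number says nothing about where the air goes inside the box. Answering that question is the job of the computational fluid dynamics work Ciufo describes.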

GMS designers are experimenting with different kinds of heat sinks that blend conduction and forced-air cooling. “We have developed more exotic materials for heat sinks,” Ciufo says. “We used a combination of active cooling in our heat sinks themselves to make sure they are as efficient as possible. We also hired new engineers to use not just metallic alloy, but also other elements to improve the amount of heat flux you can use to get out the heat. Many of our ATR chassis are conductively cooled from the card to the chassis wall, and then convection cooled along the edge of the chassis.”
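The conduction-then-convection path Ciufo describes for ATR chassis is often approximated as thermal resistances in series. The sketch below is a minimal model under that assumption. The function name, ambient temperature, powers, and resistance values are hypothetical placeholders, not GMS data.

```python
# Series thermal-resistance sketch of a conduction-cooled ATR path:
# card -> card guide -> chassis wall -> convection to ambient air.
# All resistances and powers used below are hypothetical placeholders.


def card_edge_temperature(ambient_c: float,
                          chassis_power_w: float,
                          r_wall_to_air: float,
                          card_power_w: float,
                          r_card_to_wall: float) -> float:
    """Steady-state card-edge temperature in degrees C.

    The chassis wall rises above ambient by the total chassis heat times the
    wall-to-air (convection) resistance in K/W. Each card then sits above the
    wall by its own heat times its card-to-wall (conduction) resistance.
    """
    wall_c = ambient_c + chassis_power_w * r_wall_to_air
    return wall_c + card_power_w * r_card_to_wall


print(card_edge_temperature(ambient_c=55.0, chassis_power_w=400.0,
                            r_wall_to_air=0.05, card_power_w=80.0,
                            r_card_to_wall=0.2))
```

In this model, better heat-sink materials show up as a smaller r_card_to_wall or r_wall_to_air. Every kelvin-per-watt saved is multiplied by the power flowing through that resistance, which is why improvements matter most on the high-power path.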

Industry standards

One year ago the U.S. secretaries of the Navy, Army, and Air Force issued the so-called “Tri-Service Memo” directing the Pentagon’s service acquisition executives and program executive officers to use open-systems standards that fall under the umbrella of the Modular Open Systems Approach (MOSA) project.

The memo mentions the Sensor Open Systems Architecture (SOSA); Future Airborne Capability Environment (FACE); Vehicular Integration for C4ISR/EW Interoperability (VICTORY); and Open Mission Systems/Universal Command and Control Interface (OMS/UCI).

SOSA, which revolves around the VITA OpenVPX embedded computing standard, focuses on single-board computers and how they can be integrated into sensor platforms. It involves a standardized approach to how embedded systems interrogate sensor data to distill actionable information.

The Pentagon-backed FACE open avionics standard is intended to enable developers to create and deploy applications across military aviation systems through a common operating environment. It seeks to increase capability, security, safety, and agility while also reducing costs.

VICTORY aims at interoperability among military vehicle electronics (vetronics) components, subsystems, and platforms. It is designed for multi-vendor implementation and is considered a critical enabler for the Assured Positioning, Navigation and Timing (APNT) program; several programs of record require VICTORY standards. VICTORY focuses on three core areas: tactical systems capabilities; host and network system capabilities; and vehicle system and logistics capabilities.

The OMS/UCI standard concerns a common message set that enables interoperability across several different manned and unmanned weapon systems. It focuses on interoperable plug-and-play software applications that run on a wide variety of systems, and enable designers to integrate new capabilities quickly in much the same way that smart phone users download applications.

Other new and emerging standards that influence today’s chassis and backplane designs include Hardware Open Systems Technologies (HOST); Command, Control, Communications, Computers, Intelligence, Surveillance and Reconnaissance (C4ISR) / Electronic Warfare (EW) Modular Open Suite of Standards (CMOSS); and Modular Open RF Architecture (MORA).

CMOSS is intended to move the embedded industry away from costly, complex, proprietary solutions and toward readily available, cost-effective, and open-architecture commercial off-the-shelf (COTS) technologies. It was started at the Army Communications-Electronics Research, Development and Engineering Center (CERDEC) at Aberdeen Proving Ground, Md.

The MORA initiative seeks to create standard RF and microwave systems modules in the same way that the embedded computing industry has standardized circuit card form factors, electronic interconnects, backplane connectors, and electronic chassis. Like CMOSS, it comes from Army CERDEC. MORA recognizes the embedded computing industry’s OpenVPX standards initiative as a potential model for creating open-systems RF and microwave standards.

With these kinds of standards, “there is a much higher chance of saying we want to upgrade a system’s capability using an open standard interface, and a higher chance you could go to the same or different vendors and have an improved card in that area,” says Curtiss-Wright’s Jedynak.

“The idea is to consolidate boxes onto cards,” says Jason Dechiaro, system architect at Curtiss-Wright. “You can virtualize in a lot of ways, such as no longer needing a SINCGARS box, but have a SINCGARS card in a box.” SINCGARS, the Single Channel Ground and Airborne Radio System, is a legacy anti-jam military communications system.

Industry experts, at least for now, are optimistic that standards like MOSA, SOSA, and CMOSS can enforce vendor interoperability at the bus, board, slot, and chassis level. “We see a lot of positive movement in SOSA, and its influence on various working groups in VPX,” says GMS’s Ciufo. “We want to get it right this time to have real interoperability among vendors.”

About the Author

John Keller

Editor-in-Chief

John Keller is the Editor-in-Chief of Military & Aerospace Electronics magazine, which provides extensive coverage and analysis of enabling electronics and optoelectronic technologies in military, space, and commercial aviation applications. John has been a member of the Military & Aerospace Electronics staff since 1989 and chief editor since 1995.